Copyright © 2008-2009 OW2 Consortium

This work is licensed under the Creative Commons Attribution-ShareAlike License. To view a copy of this license,visit http://creativecommons.org/licenses/by-sa/2.0/deed.en or send a letter to Creative Commons, 559 Nathan Abbott Way, Stanford, California 94305, USA.

- Preface

- 1. Introduction

- 2. Principles

- 3. Cluster configuration

- 4. Cluster and domain management

- 5. Tooling

- 6. Examples

- 7. Troubleshootings

- A. Appendix

At first, this guide gives an overview of the Java EE clustering principles. Afterwards it describes how to set up clustering in the JOnAS application server and how to manage it. Finally some tools and examples are presented.

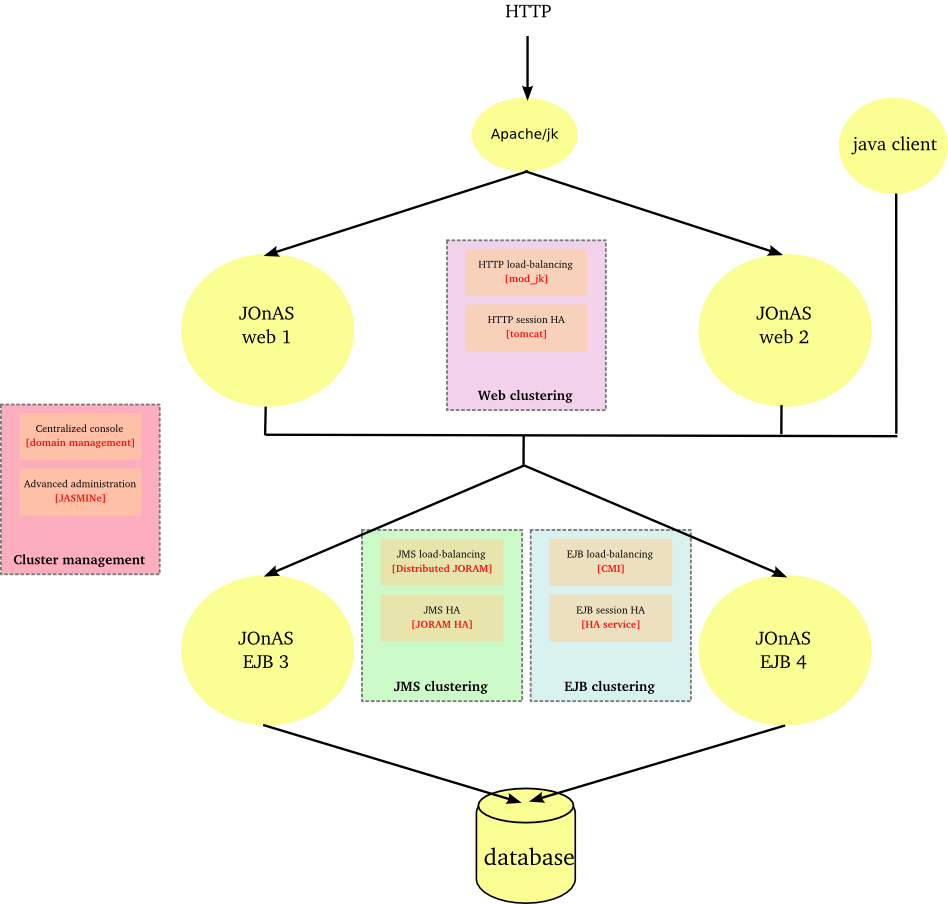

JOnAS provides an end to end solution for clustering that ensures transparent distribution, high availability and scalability of Java EE applications.

-

At the web level through

Apache/mod_jk or Apache/mod_proxy_balancer for load-balancing the HTTP flow between multiple JOnAS/Tomcat instances.

Tomcat for providing a TCP-based solution of HTTP session replication, ensuring their high availability

At the JNDI level through CMI and its replicated registry

At the EJB level (EJB2 and EJB3 support) through CMI and its cluster proxy

At the JMS level through JORAM server and JORAM HA

At the management level through the domain management feature and the JASMINe project.

|

A farm is a set of similar JOnAS nodes gathered for ensuring scalability and a first level of availability.

A cluster is a set of similar JOnAS nodes gathered for ensuring high availablity.

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

note: the |

A replication domain is a subset of a JOnAS cluster which determines the replication boundaries.

The concept ensures the scalability of the replication when dealing with a large number of nodes.

A JOnAS node or JOnAS instance is an autonomous server with its

own configuration and running in a dedicated JVM. Several JOnAS nodes may lie in the same

virtual or real system.

A JOnAS domain gathers a set of JOnAS nodes under the same administration authority.

A domain is defined by a name and the JOnAS nodes belonging to a domain must have an unique name within this domain.

A master node is a JOnAS node of a domain dedicated to the administration. It enables to

manage the JOnAS instances of a domain from a centralized point. Several master nodes can be defined within a domain.

The scalability of a system lies in its capacity to evolve in power by adding or removing software and hardware elements without impact on its architecture.

The scalability aims at adjusting the system bandwidth to fit with the performance needs of the service. It is achieved by replicating the resources within a farm according to the input requests load.

Farming provides a first level of availability: when a resource failure occurs, the request will be submitted again (fail-over mechanism) and will be routed towards another resource. In this case, the failure is not transparent to the user whose request is processed by the failed resource. But the service is not interrupted as the user can restart its operation. For the users whose requests are processed by other resources within the farm, the failure will be transparent and the only impact will be the response time due to load distributing among a smaller number of resources.

The high availability of a system lies in its capacity to serve requests under heavy load and despite any component failure.

A highly available architecture contains some replicated elements. The number of replicas determines the number of simultaneous failures the system is able to tolerate without interruption of service. According to the fail over implementation, the service may be damaged during a short period. This service malfunction may impact users at different levels.

Replication mechanisms contribute to the continuity of service and enable to mask the resources failures to the users (or at least to minimize their visibility). Such mechanisms do impact the system sizing as they consume more resources or for a same sizing do impact the global performance.

Ensuring the scalability of a web application

Improving the availability of a web application (without session replication)

HTTP farming consists in distributing the processing load across N JOnAS instances. The same application is deployed all over the cluster and a request may be processed by any instance.

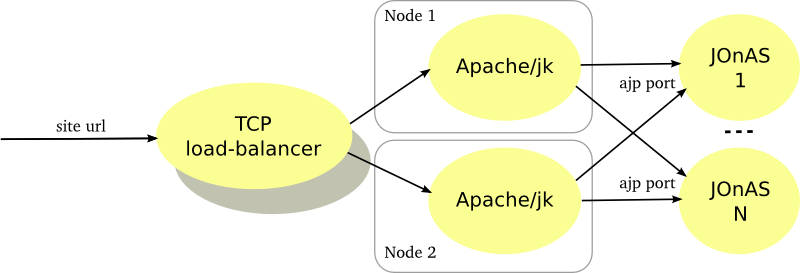

A load-balancer is setup in front of the JOnAS instances. The intermediate device handles the site url in order to mask the JOnAS instances multiplicity to the users. The load-balancer supports the HTTP session affinity in order to route a client towards the server hosting its session.

A load-balancer may be a software or a hardware device or a mix of two. The application server instances may be collocated on a same machine or may be distributed across several machines.

The intermediate device may be duplicated for avoiding a SPOF.

Hereinafter the figure illustrates a HTTP farm composed of a L4 switch, 2 Apache servers and N JOnAS instances.

|

The Apache server is configured with the mod_jk plugin for ensuring the load-balancing between the JOnAS instances and the session affinity through the AJP protocol. Furthermore mod_jk supports the failover when a server crashes. The Apache server may be used for caching the static pages, performing compression or encryption and, thus, can reduce the application servers load.

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

From Apache 2.2, mod_proxy_balancer is alternative to the mod_jk plugin. It supports AJP protocol but also HTTP and FTP. |

The number of JOnAS instances must be determined according to the expected performance. The Apache server being a SPOF, it should be duplicated and a network device distributes the load at the TCP level between them. Of course the network device should be replicated as well (primary/backup mode).

HTTP farming is very common and widespread. It fits well with the more frequent requirements in terms of scalability and availability. Furthermore, this architecture pattern can be used for implementing a cluster of web service providing that the web service is standard and is stateless.

When the application server tier gets a bottleneck, this architecture enables to improve the performance by adding some JOnAS instances.

The application server may be unstable, in particular under heavy load. This architecture enables to improve the availability by reducing the impact of failures. However the failures are not transparent to the users who are connected to the in-fault server since they lose theirs sessions. To be note that after a crash, the load is distributed between the survivors servers what may impact the performance.

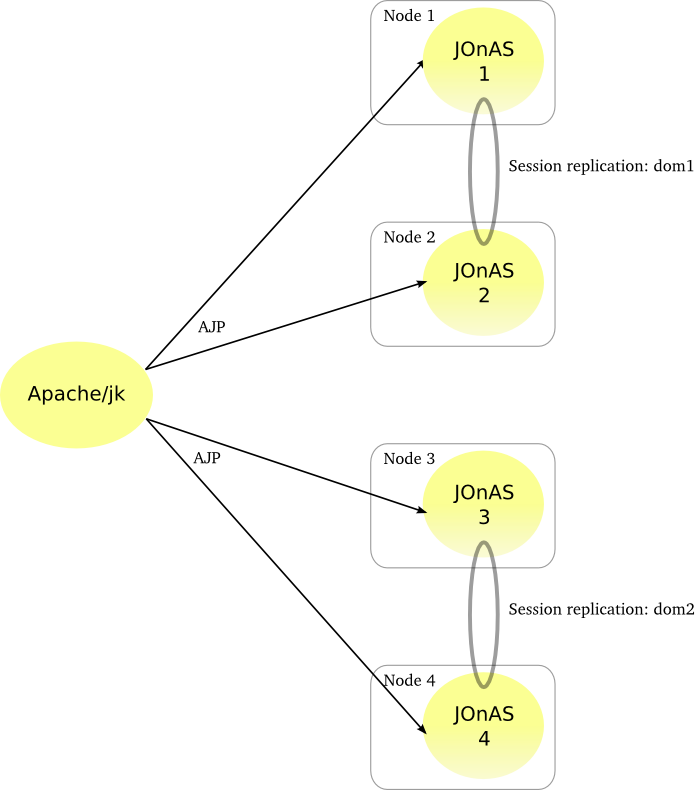

HTTP clustering is used when service continuity of web application is expected and when failures must be transparent for the users. It consists in replicating the HTTP sessions on P JOnAS instances among N.

The session context is replicated across a replication domain. Domain members are met through a multicast based protocol. The session replication takes place through point to point protocol (TCP based) and the replication mode can be synchronous (the user response is sent after the replication) or asynchronous (the user response is immediate and the replication is delayed).

Hereinafter the figure illustrates cluster with 4 JOnAS instances behind an Apache server. Requests are load-balanced across the 4 instances . The jk connector handles the session affinity and ensures that all the requests related to one session are routed towards the same instance. 2 replication domain are defined and each session is replicated on 2 JOnAS instances hosting by 2 different nodes for ensuring not to lose a session when a crash node occurs.

|

The Apache server can be duplicated for avoiding a SPOF.

Such a configuration lies both in the Apache/jk elements (related to the load-balancing) and in the JOnAS instances (related to the session replication).

At the jk level, a load-balancer worker is defined with 4 AJP workers. Session affinity is set and two domains are configured.

At the JOnAS level, the

tomcat6-server.xmlfile defines an AJP connector, a jvmRoute attribute set to a hosting domain and a cluster element for the replication. To be noted that the web application meta-data must be defined as distributable through thedistributableelement.

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

From Apache 2.2, mod_proxy_balancer is alternative to the mod_jk plugin. It supports AJP protocol but also HTTP and FTP. |

HTTP clustering must be used only when the HTTP session loss is not acceptable. A server failure is visible only for the connected users who have an on-going request processed by the failed instance. The fault will be transient, the request will be sent towards another server which hosts a backup of the session.

The HTTP session replication impacts the performance and causes an overhead on the system resources consumption (memory. network, cpu). Moreover, the replication in a synchronous mode induces an extra latency.

For reducing the performance impact, it is recommended to use a dedicated network interface for the HTTP session replication (separated from the AJP requests) and to limitate the replication across 2 JOnAS instances (replication domain).

EJB farming aims at providing:

scalability of the EJB tier

availability of the EJB tier (without session replication)

EJB2 and EJB3 farming are supported by JOnAS.

The EJB tier duplication is not visible for the client.

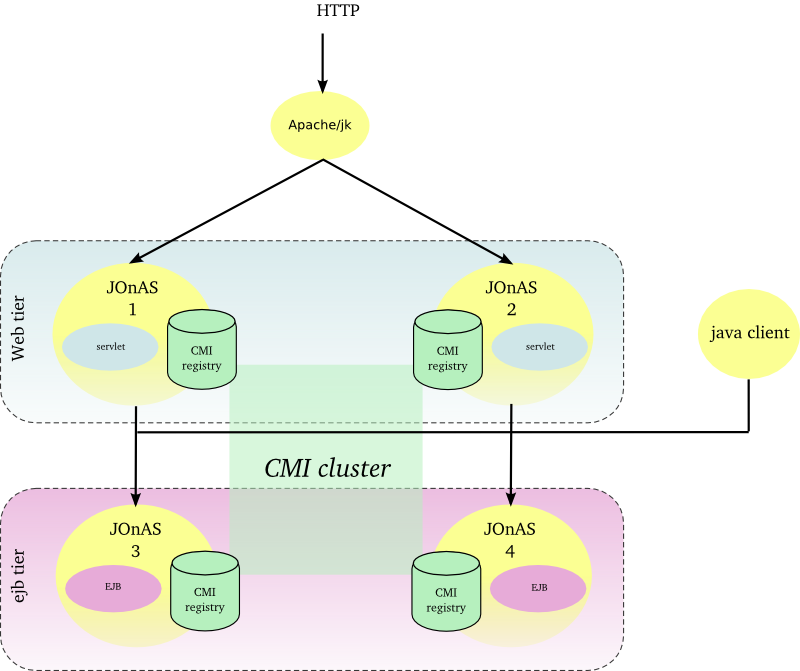

EJB farming relies on the CMI component which enables clustering on top of different RMI protocols such as jrmp, iiop or irmi.

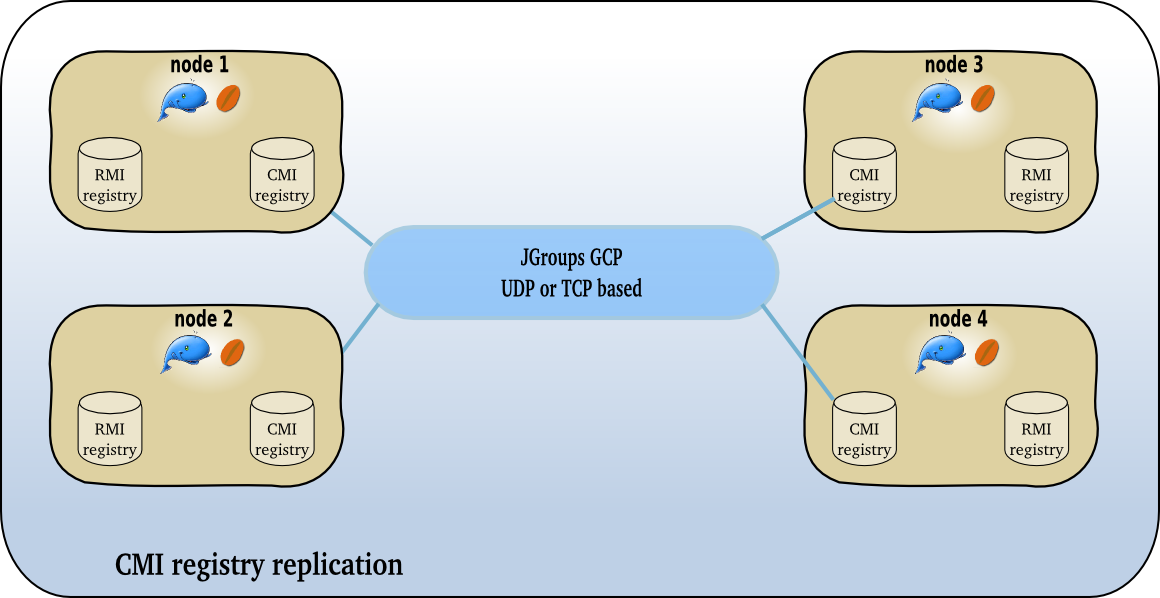

When a client program intends to access an EJB, it invokes a JNDI registry lookup for getting an EJB home or EJB remote proxy. In an EJB farm, the CMI registry is replicated in order to provide a cluster view to the client. The cluster view represents a a list of JOnAS nodes hosting the EJB with additional clustering meta-datas. The replication and the group membership rely on the JGroups's group communication protocol. The cluster view is updated dynamically through a control channel and a dedicated control thread in the client JVM. The CMI client proxy ensures the load-balancing and the fail-over of the EJB invocations.

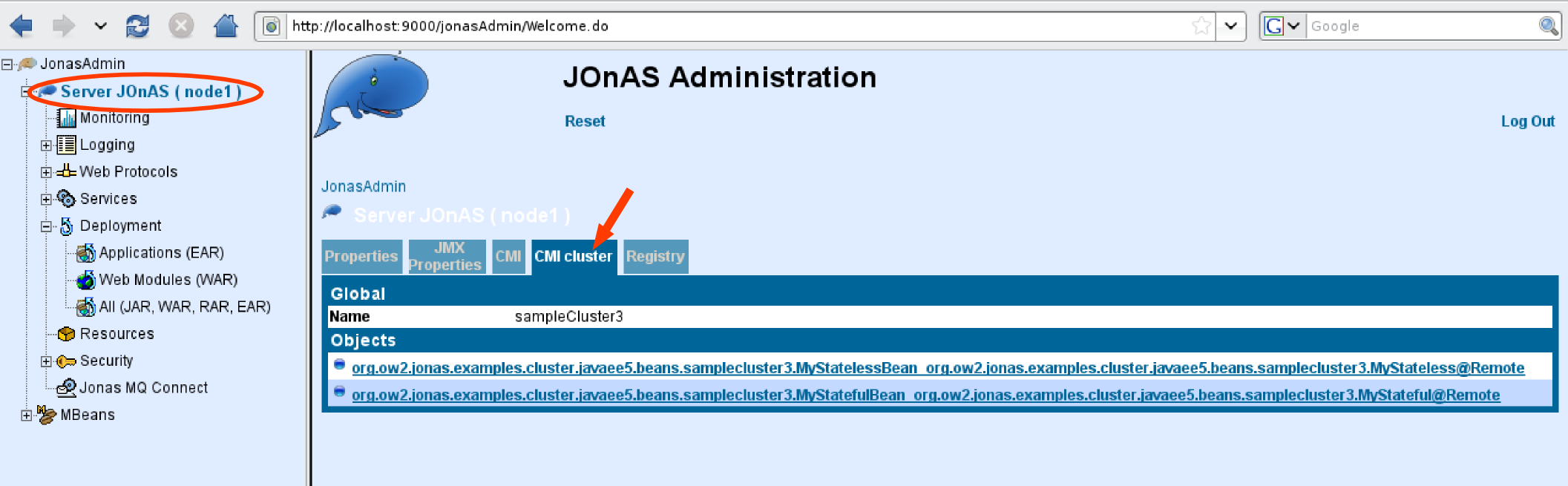

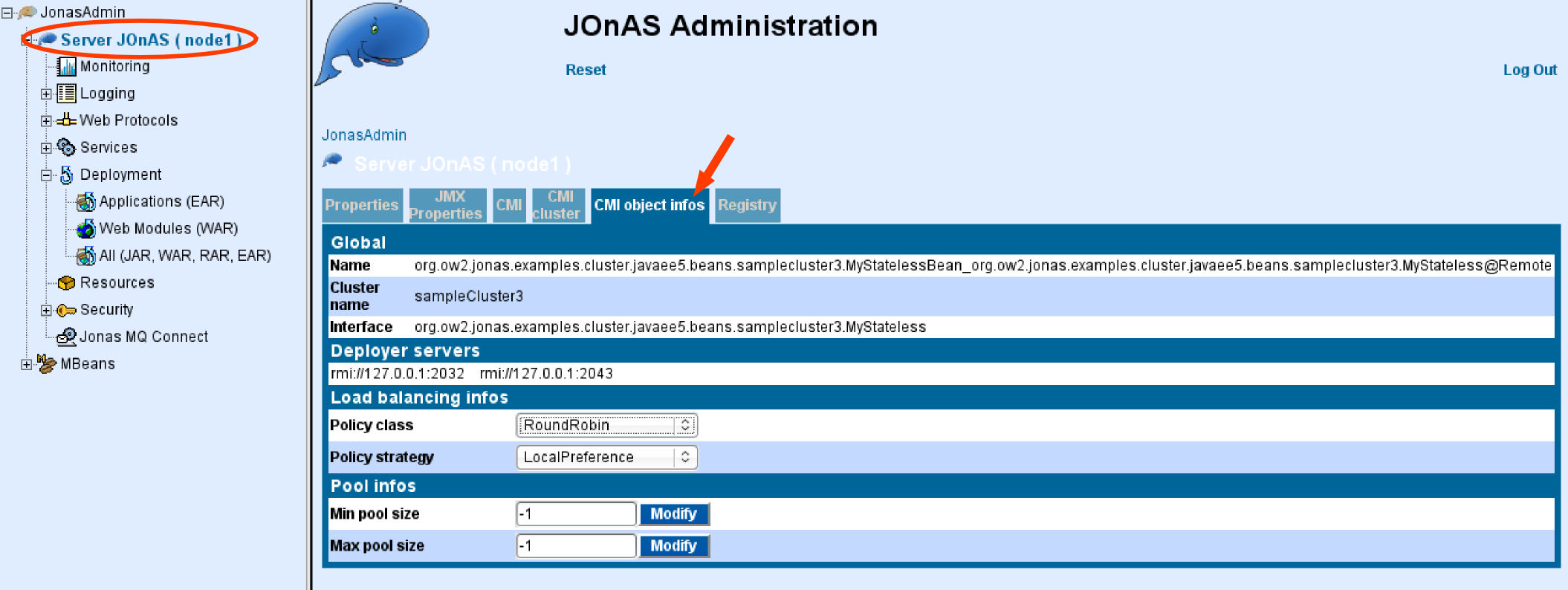

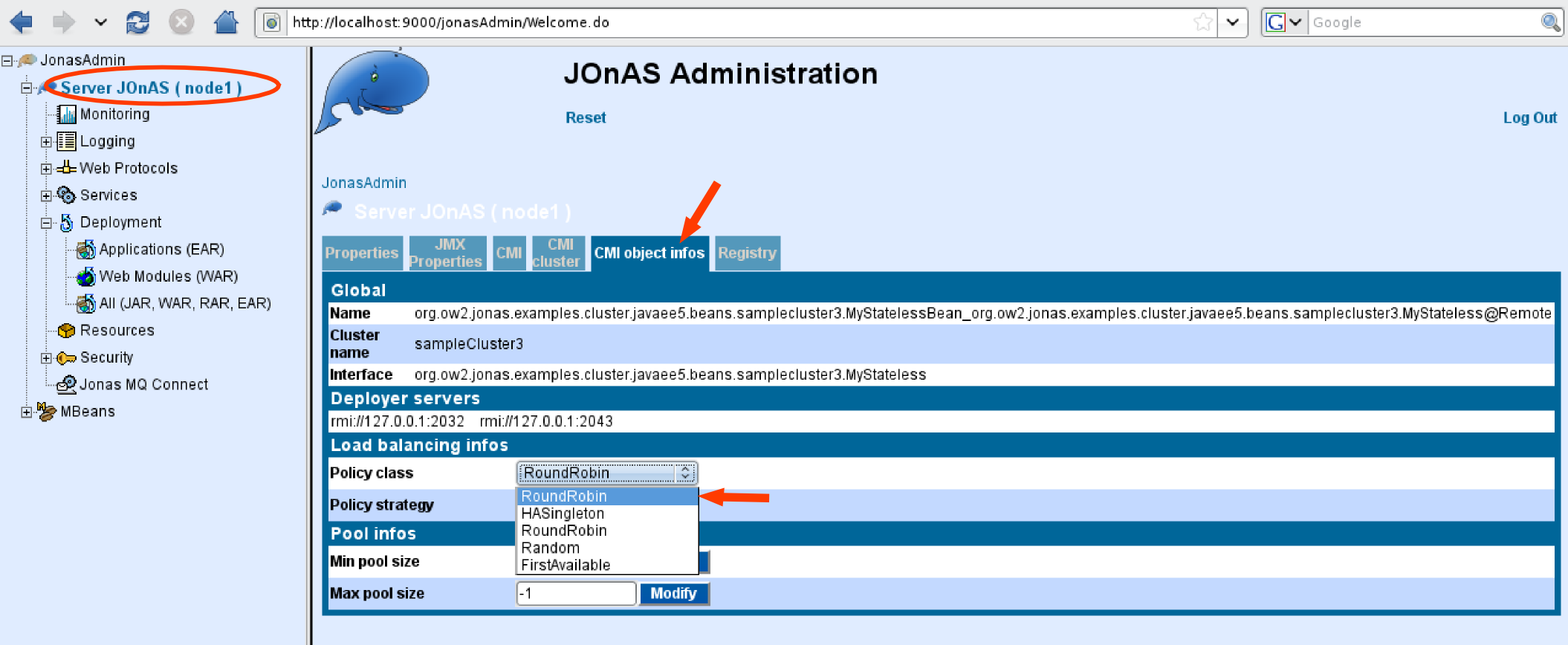

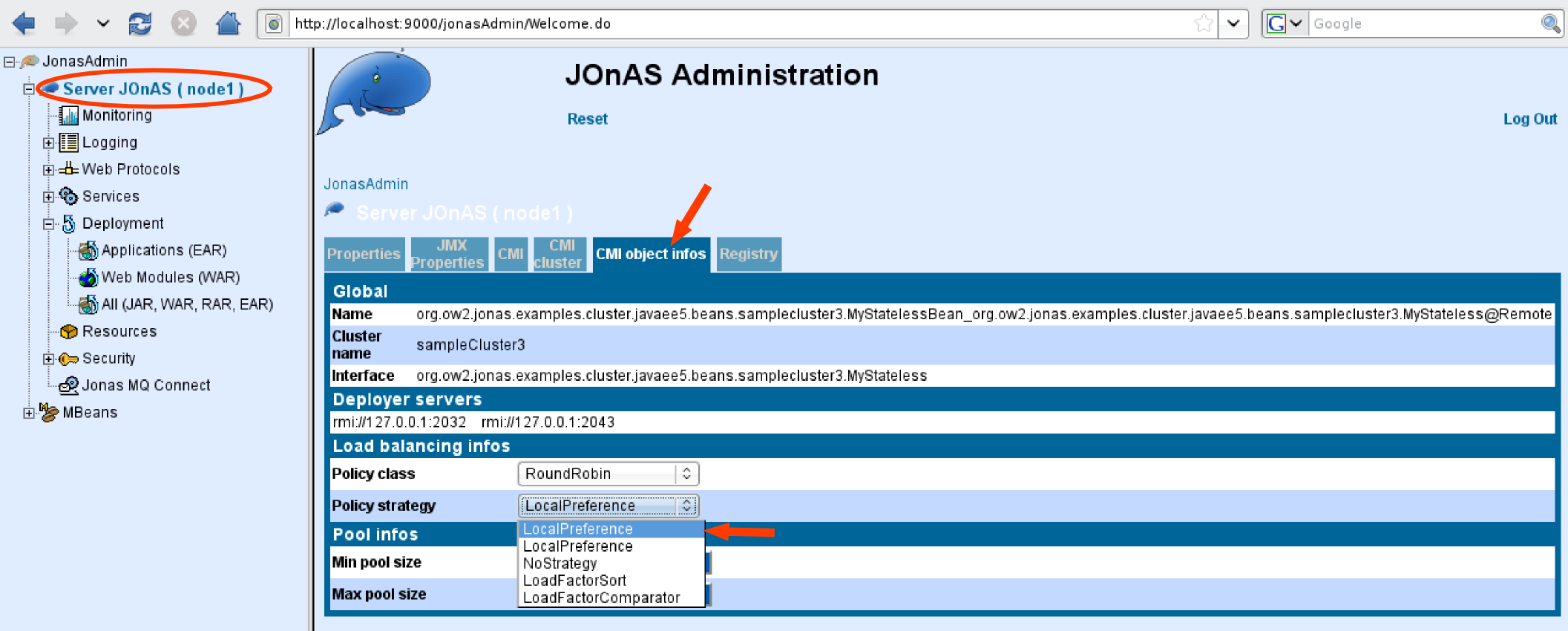

In the clustering meta-datas, the cluster logic defines both the load-balancing and fail-over algorithms. The cluster logic can be customized. A set of default policies are provided with CMI: round-robin, first available, random, etc... For each one, a strategy can be added, for example, local preference (for dealing with the collocated mode) or weighted round-robin (for adding a weighting).

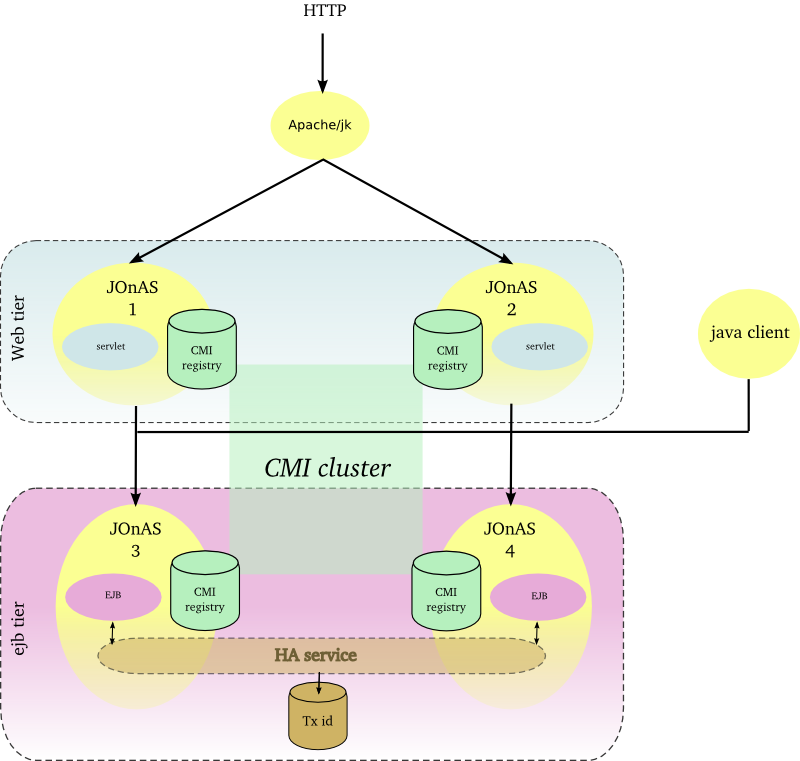

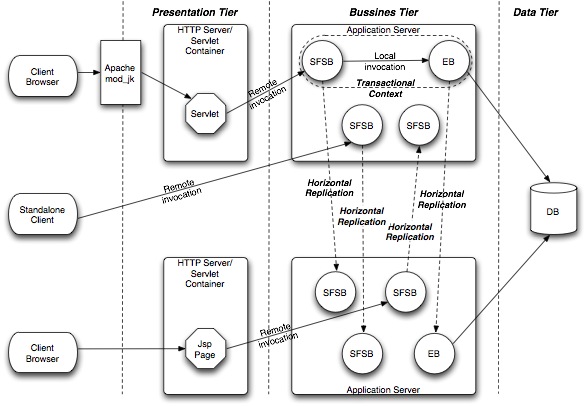

Hereinafter the figure illustrates a EJB farm in a 2 tier architecture with both web client and java client.

|

The Apache server is configured with the mod_jk plugin for ensuring the load-balancing between the JOnAS instances and the session affinity. Two JOnAS instances (1-2) host the presentation layer and are configured with a servlet container. Two JOnAS instances (3-4) host the business layer and are configured with an ejb container. The CMI registry is replicated across the cluster members in order to share informations about EJBs deployment and topology. Both servlet and java clients do access to EJB and do benefit from load-balancing and availability. In the case of a SFSB (stateful session bean), the EJB is created only in one instance and the state is lost if the JOnAS instance crashes, state replication is addressed in Section 2.2.8, “EJB cluster”.

The JOnAS instances number must be determined according to the expected performance.

EJB distribution aims at deploy a JavaEE application which is distributed on many JOnAS instances.

EJB distribution also relies on the CMI component.

Without CMI, EJB needs to be modified to allow invocations on the remote EJBs, for example by setting the Context.PROVIDER_URL of the remote registries or by using the specific features of the IIOP protocol. With CMI, EJB can continue to access to the remote EJBs as if they were locally deployed.

EJB clustering is used when service continuity of ejb application is required and when any failure must be transparent for the users. It relies on the stateful EJB replication and manages the transaction in order to:

ensure that the replicated system do have a similar behavior of the not replicated one,

in case the client doesn't abort, the transaction is executed exactly once, and no more than once otherwise.

The EJB replication relies on

-

a mechanism for replicating the state of the EJBs and the business methods invocation return values. This mechanism is provided by the HA service and different implementations do exist (JGroups) or are under development (Terracotta, Pair replication);

a mechanism for marking the transaction in a persistent database. The information is used at the fail-over time to determine whether the transaction was aborted and whether the request must be replayed.

a smart proxy in charge of redirecting the client calls towards another replica when a failure occurs.

An EJB is replicated only if it is specified as replicated in the component meta-datas (annotation and/or deployment descriptor).

A stateful ejb is created on a JOnAS instance selected according to a load-balancing algorithm (default is round-robin). After the creation all the business methods invocations are routed to this JOnAS instance unless a failure occurs.

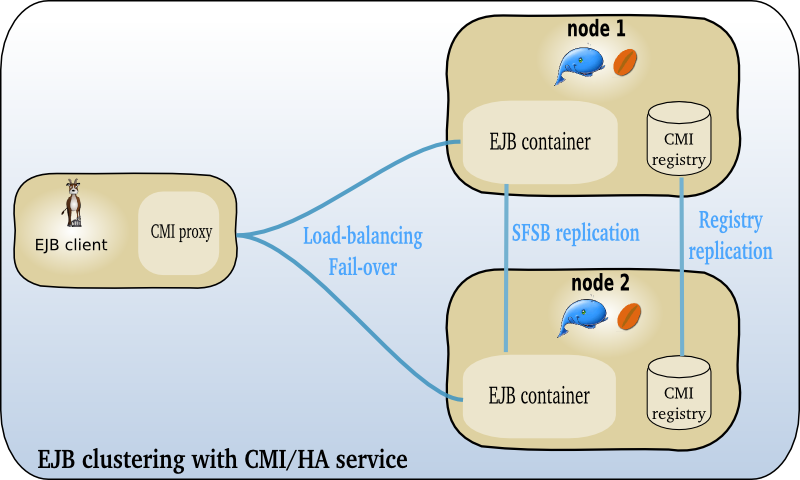

Hereinafter the figure illustrates a EJB cluster.

|

Each JOnAS instance of the EJB tier is configured with the HA service in charge of the session replication. CMI client API supports fail-over for both EJB2 and EJB3.

This architecture pattern is recommended when the application contains some stateful EJB and doesn't tolerate any session loss and any interruption of service. However the user must be aware that this kind of setting does impact highly the performance as the session is replicated between the JOnAS server at each method call.

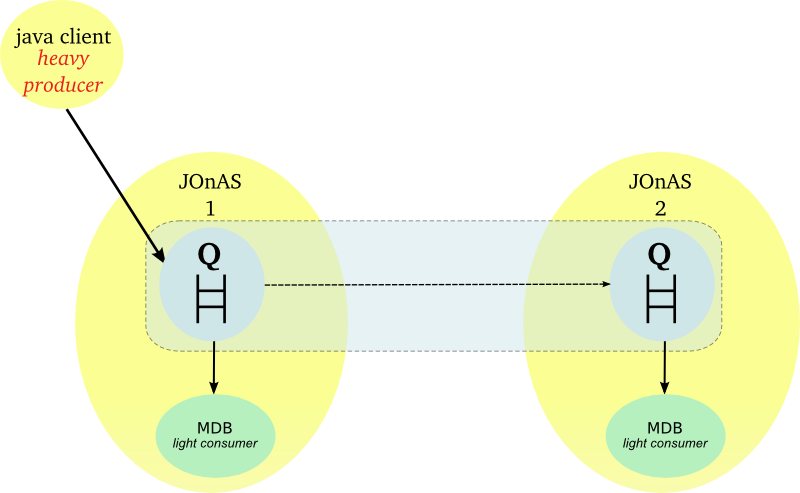

JMS Farming aims at:

ensuring the scalability of asynchonous applications,

improving the availability of asynchonous applications.

JMS farming consists in distributing the messages load across N JOnAS instances. The same application is deployed all over the cluster and a message may be processed by any instances.

JMS farming relies on the distribution capabilities of the JORAM product. Load-balancing can take place both at the server side or at the client side. When a server crashes, pending messages are lost unless a specific mechanism is set up at the disk level (such mechanisms are addressed in Section 2.2.10, “JMS cluster”).

At the server side, messages can be redirected towards other JOnAS instances when the load reaches a threshold.

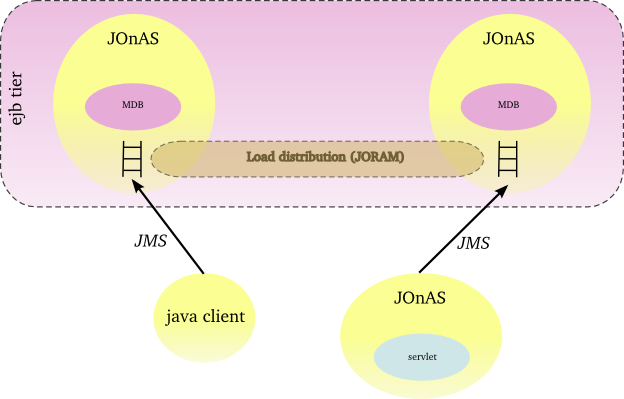

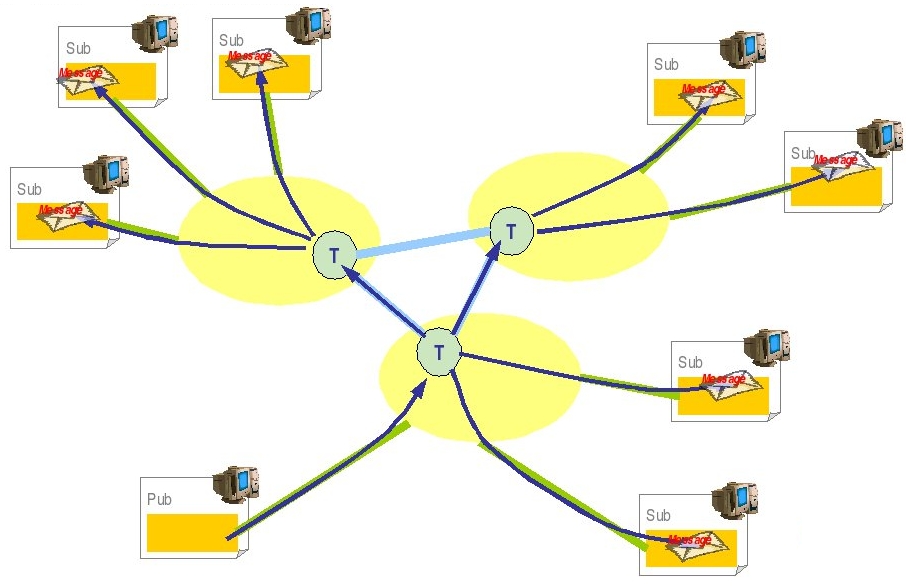

Hereinafter the figure illustrates a JMS farming with a distribution control at the server side.

|

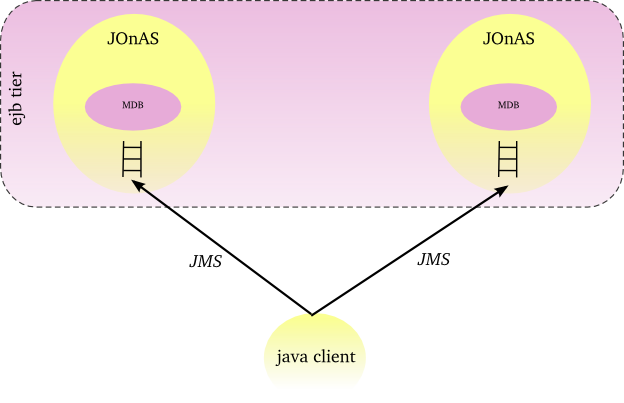

At the client side, messages can be distributed across a set of JOnAS instances according to a load-balancing algorithm (default is random).

Hereinafter the figure illustrates a JMS farming with a distribution control at the client side.

|

JMS farming fits well with the need of scalability and availability of the EDA (Event Driven Architecture) applications. When the application provides a JMS based asynchronous interface, the JORAM's clustering capabilities do enable to equilibrate the load either from the client side or from the server side. JMS farming is recommended when the message loss is acceptable and constitutes a good compromise in terms of performance and flexibility.

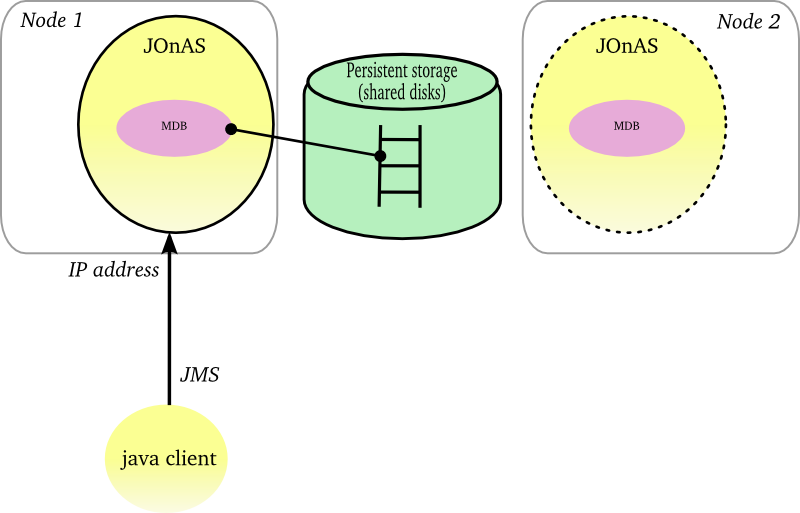

JMS cluster is used when the messages loss is not acceptable and when the interruption of service is not permitted.

Two solutions are available for ensuring the messaging high availability with JOnAS:

-

through the persistent mode of JORAM combined with some cluster features at the operating system level (NFS, HA/CMP product, Veritas product, ...). The HA OS must provide a HA network address (virtual ip address) which can be reallocated dynamically when a node failure occurs. JMS objects, topics and queues, must be stored on a shared disk space (external disk array or shared disk space as NFS). JORAM supports 2 storage types : file and database. When a JOnAS instance fails, the on-going messages are retrieved by a backup instance in the persistent storage, the virtual IP address is bound to the backup node and the messages are processed.

-

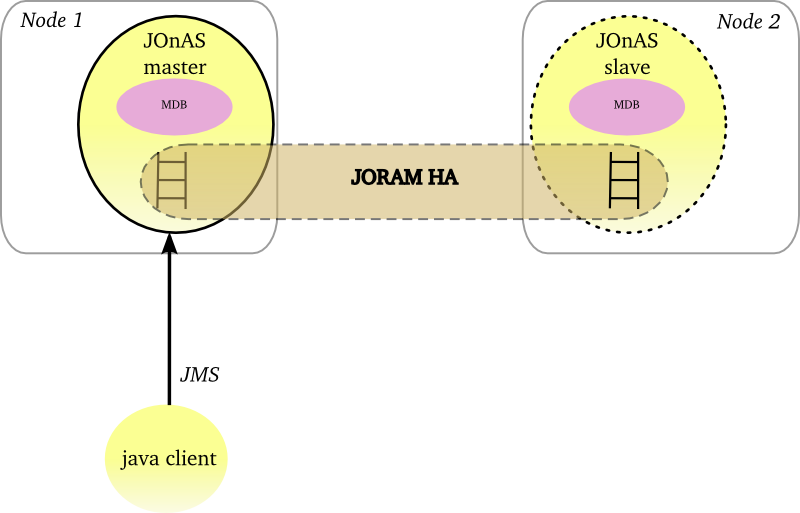

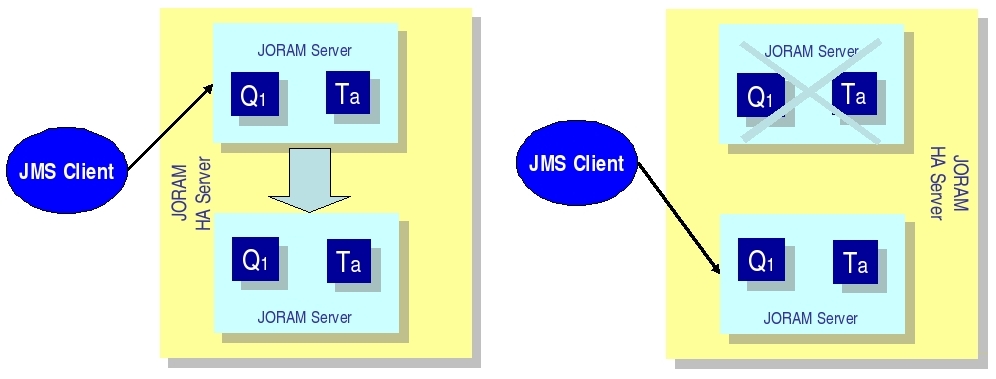

through JORAM HA concept delivered with the JORAM project. The mechanism relies on a Master/Slaves approach. At one point, only the master instance is active and all the JMS requests are replicated towards the slaves instances. The JMS replication is synchronous and implemented with JGroups.

This architecture pattern is recommended when the application doesn't tolerate any message loss and any interruption of service. Two mechanisms are available: the first one relies on operating system HA features and the JORAM's persistent mode whereas the second one relies on the native JORAM capabilities and a replication of the JMS requests. In case of the underlying OS provides HA features, the first solution is simpler in term of configuration and provides better performance. Otherwise JORAM HA solution can be considered and the user must pay attention to the performance impact.

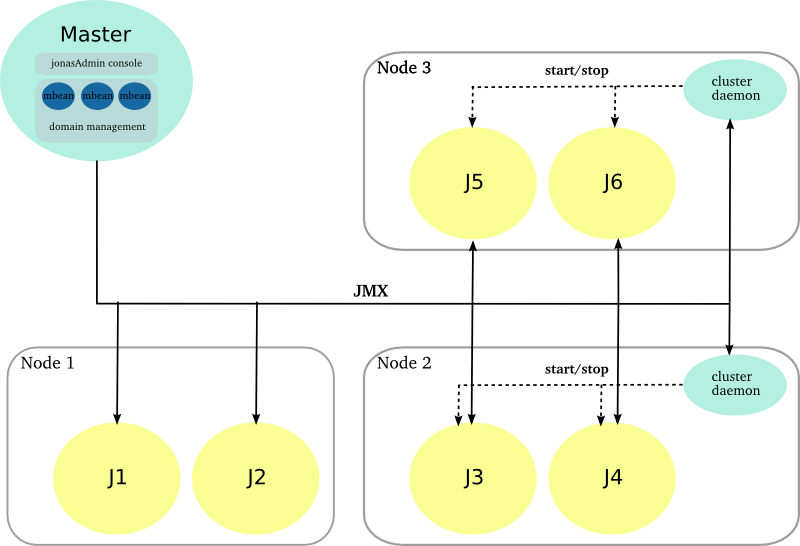

Each JOnAS instance can be managed through a local jonasAdmin console. The domain management enables to manage a set of JOnAS instances from a centralized jonasAdmin console.

A domain is a set of JOnAS instances that are running under the same management authority. The instances within a domain can be standalone, distributed or gathered in a cluster as well. The instances are managed from an unique console deployed in a dedicated JOnAS instance named master, configured with the domain management enabled.

Domain management is composed of the following features:

- discovery service for detecting automatically the instances and the clusters.

- JOnAS instance and cluster monitoring for maintaining a JOnAS instance state view and a cluster view as well as for tracking some indicators.

- JOnAS instance control for remote starting or remote stopping of the JOnAS instances.

- domain-wide deployment for deploying Java EE modules across a set of JOnAS instances or clusters.

- instance configuration for setting or retrieving a configuration attribute from a JOnAS instance as available through a local jonasAdmin console.

Several interfaces are provided:

- console with the centralized jonasAdmin console.

- command with the jonas admin command which has been extended to deal with domain.

- JMX through some domain management MBeans.

The domain management is optional. When enabled, JOnAS instance within a domain must have an uniq name instance.

The domain management relies on:

- MBeans objects deployed in each JOnAS instance and accessible through the JMX remote interface.

- One or several management JOnAS instances masters hosting the domain management features.

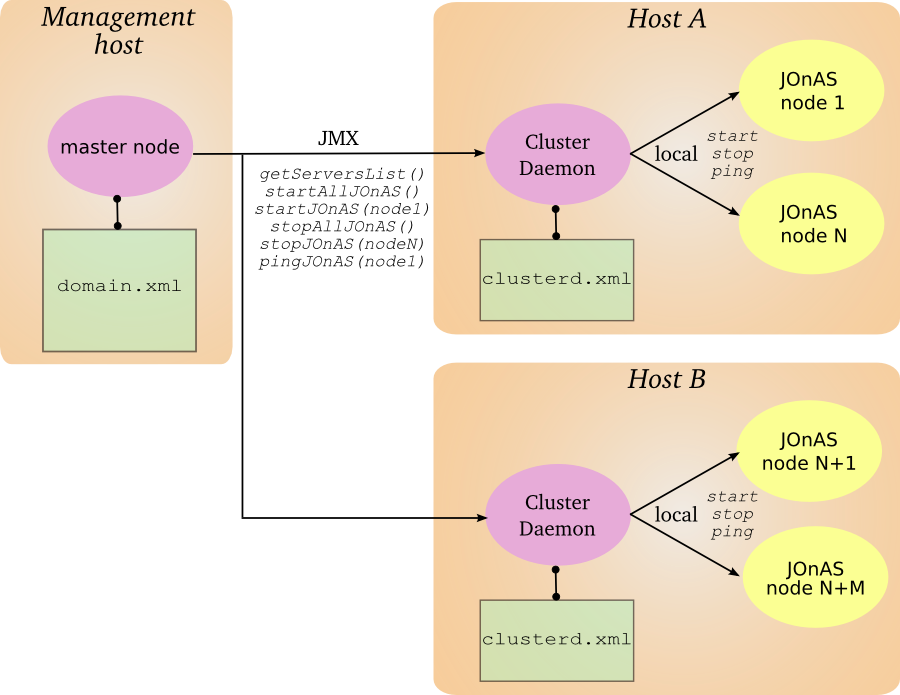

- Optionaly some cluster daemons enabling to control remotely the JOnAS instances.

|

A dedicated JOnAS is assigned with the master role which enables the domain management feature. The jonasAdmin console is deployed in this instance and is no more necessary in the others ones.

The domain management functions are exposed through a set of MBeans that are used by the jonasAdmin console.

The master instance interacts with the managed JOnAS instances and the cluster daemons through JMX. The master node periodically polls the managed instances for providing states and statistics.

Several master instances can be defined for high availability, only one must be enabled at one point.

The cluster daemon is in charge of controlling the JOnAS instances (start/stop) that are collocated on the same machine. It is an optional component in the domain management infrastructure and can be added on an existing configuration.

It is accessible through a JMX Remote interface and provides the start/stop operations. By default, it relies on the native JOnAS command for driving the JOnAS instances. The user may put its own commands for setting a particular environment before starting/stopping a JOnAS node.

The managed JOnAS instances must respect some conventions for domain management:

- the managed instance must be aware of the domain.

- the managed instance must have an unique name in the domain.

Both domain name and instance name are specified in the starting command:

jonas start -n instance_name -Ddomain.name=domain_name.

The discovery service aims at detecting when a new JOnAS instance appears or when a JOnAS instance leaves the domain. It enables the master instance to retrieve the managed instances without any prior informations about them.

Two implementations of the discovery service are available (the choice is done by configuration):

- a IP multicast based implementation.

- a JGroups based implementation. The group communication protocol stack can be configured on top of UDP or TCP.

The first one relies on IP multicast whereas the second one uses point to point communication.

If the managed instances cannot be discovered automatically due to the network configuration or the administration policies, the domain topology can be described statically in the domain.xml file.

JOnAS provides different administration interfaces:

- a graphical console with jonasAdmin console that can be centralized if deployed in the master instance.

- a

jonas admincommand has been extended for supporting the domain management feature (remote control, cluster-wide deployment and so on. - a JMX interface through the JOnAS MBeans.

- a EJB interface through the mejb.

- a Web Service interface through the mejb.

For the management point of view, a cluster is a group of JOnAS instances. The JOnAS instances within a cluster are called cluster members. A JOnAS instance may be a member of several clusters in the domain.

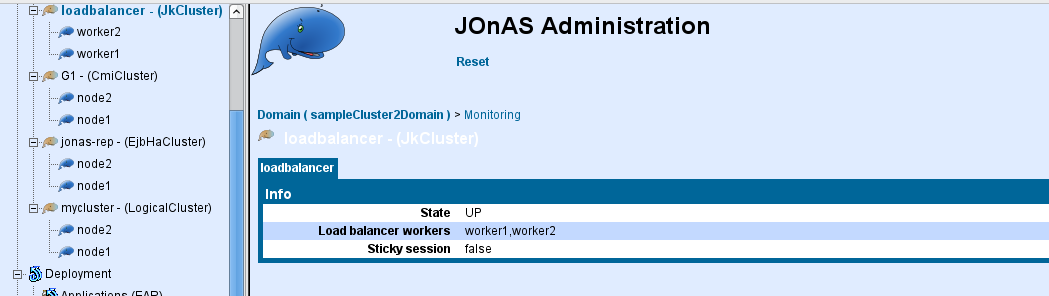

A cluster is an administration target in the domain: from the common administration point of view, the administrator may monitor the cluster or apply to it management operations like deploy or undeploy of applications.

There are two main cluster categories:

-

Clusters containing instances that are grouped together only to facilitate management tasks.

-

Clusters containing instances that are grouped together to achieve objectives like scalability, high availability or failover.

The clusters in the first category, called Logical Cluster, are created by the domain administrator based on his/her particular needs. The grouping of servers (the cluster creation) can be done even though the servers are running.

In the second case, the servers which compose a cluster must have a particular configuration that allows them to achieve the expected objectives. Once servers are started, the management domain feature is able to automatically detect that they are cluster instances, based on configuration criteria and MBeans discovery. Several cluster types are supported by the domain management function. They correspond to the different roles a cluster can play:

-

JkCluster - allow HTTP request load balancing and failover based on the mod_jk Apache connector.

-

TomcatCluster - allow high availability at web level based on the Tomcat HTTP session replication solution.

-

CmiCluster - enable JNDI clustering and allows load balancing at EJB level, based on the CMI protocol

-

JoramCluster - allow JMS destinations scalability based on the JORAM distributed solution.

-

JoramHa - allow JMS destinations high availability based on JORAM HA.

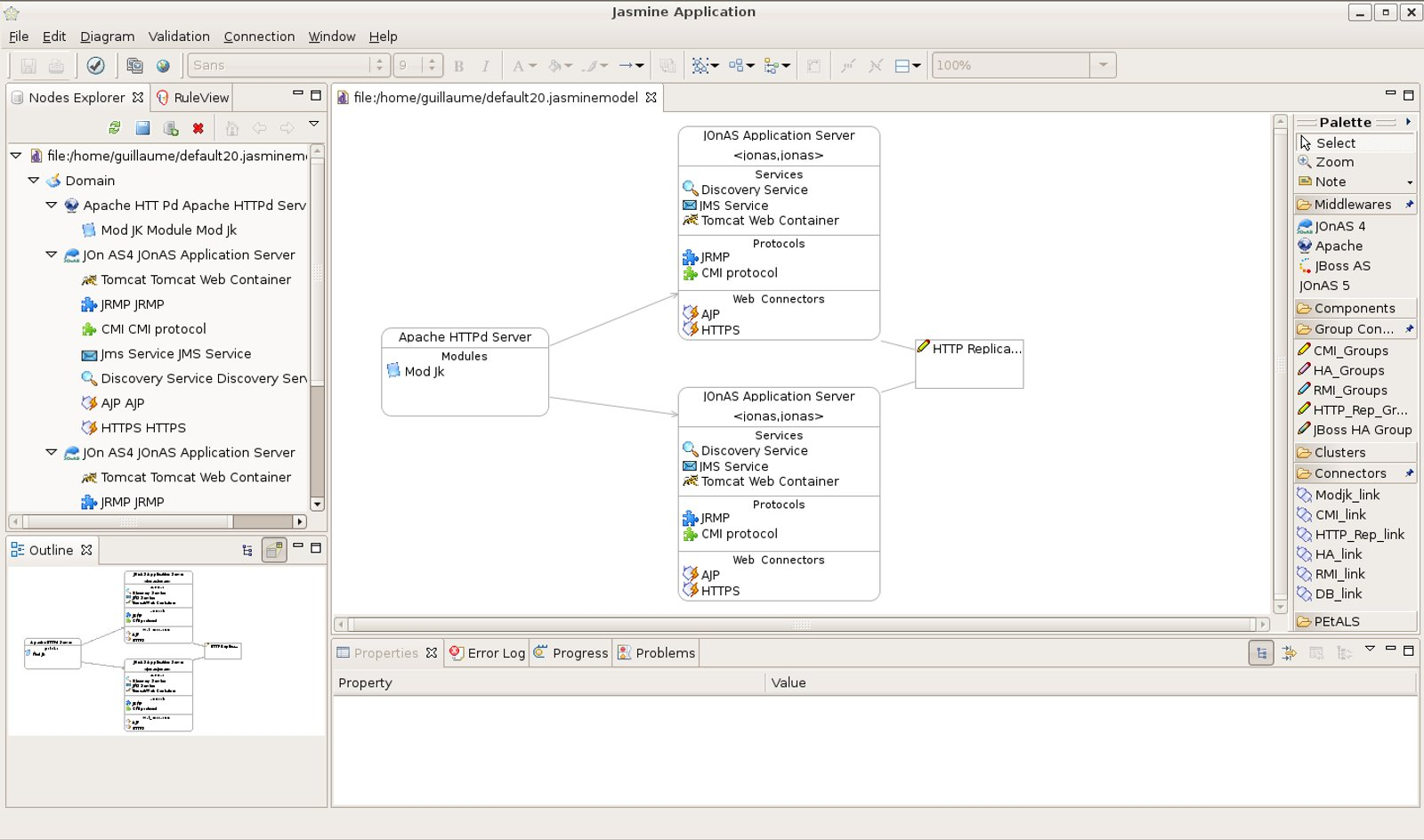

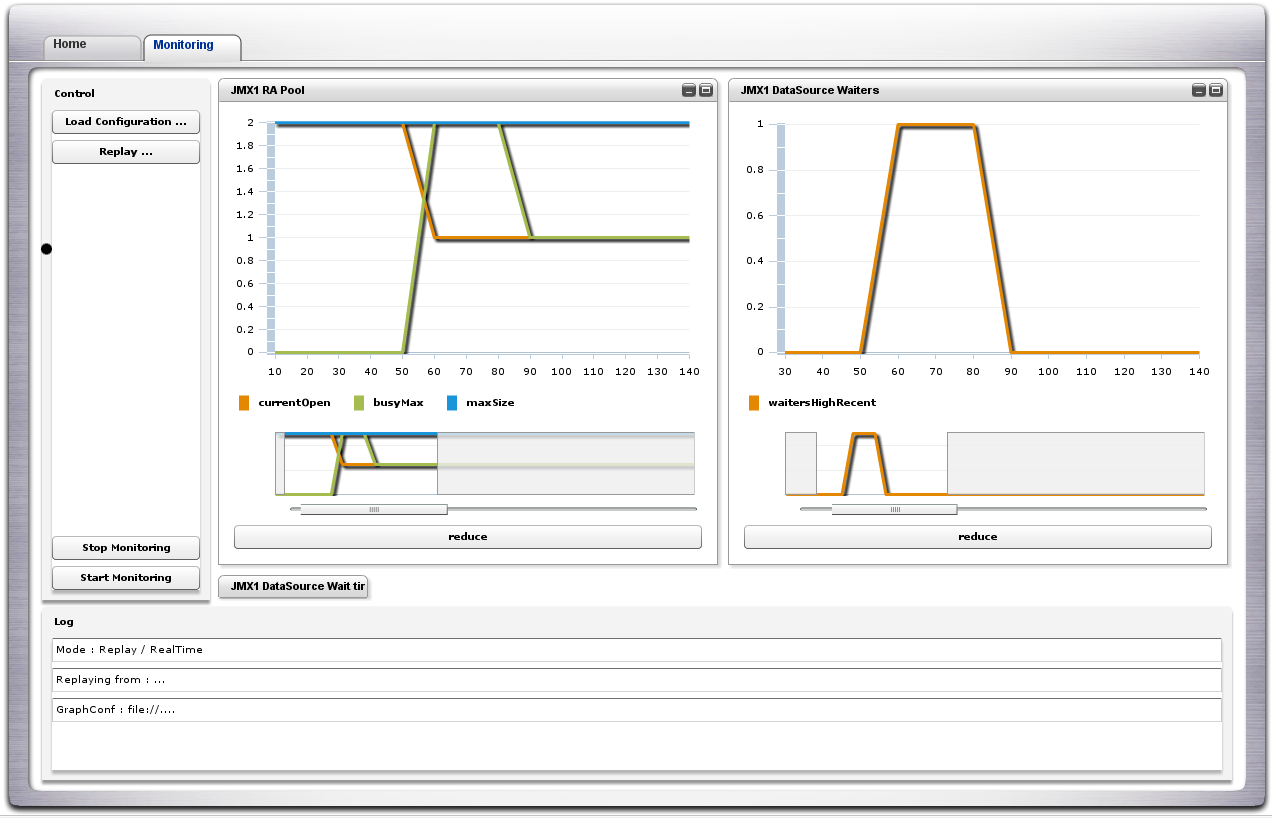

Advanced administration features are provided through the JASMINe project:

- JASMINe Design for building a cluster configuration through a graphical interface.

- JASMINe Deploy both for deploying the middleware and the applications across a distributed infrastructure.

- JASMINe Monitoring for helping the operator to detect errors and for tracking the performance.

- JASMINe SelfManagement for improving the system reliability and performance automatically.

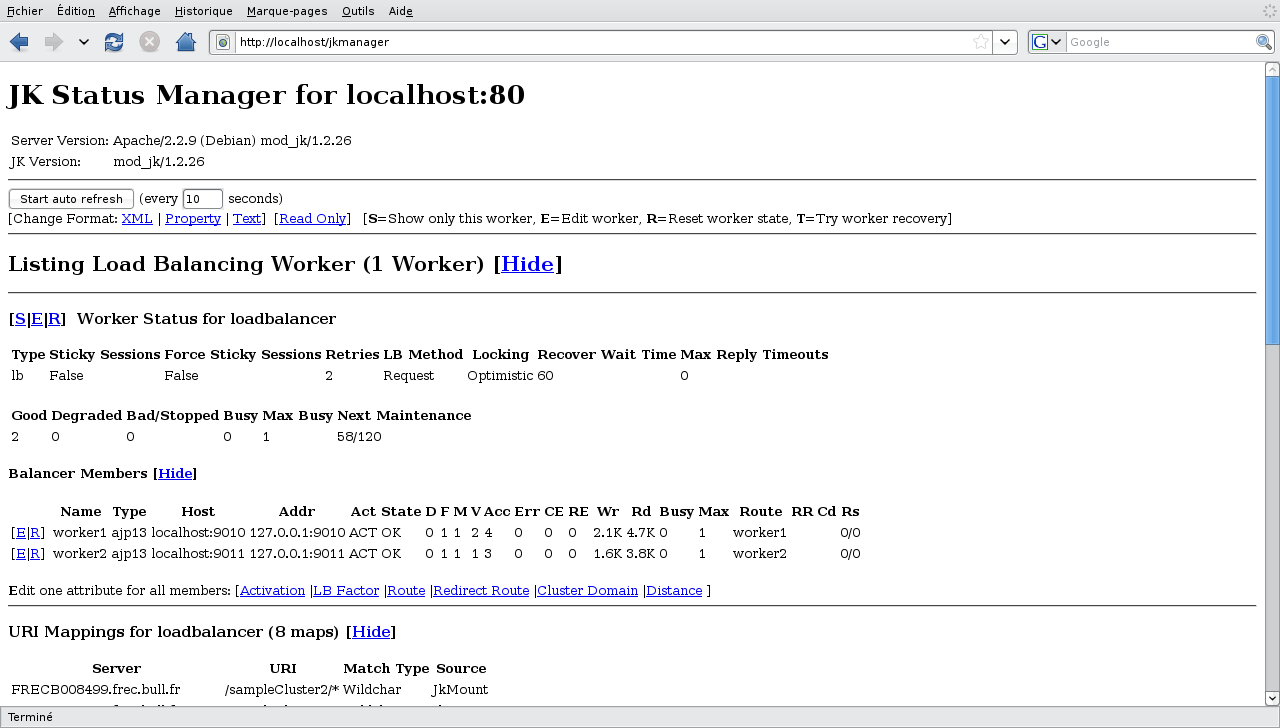

As with other Apache modules, mod_jk should be first

installed on the modules directory of the Apache Web

Server and the

httpd.conf

file has to be updated. Moreover, mod_jk requires

workers.properties

file that describes the host(s) and port(s) used by the

workers.

Here we provide an example of

workers.properties

file to connect the Apache frontal with two

workers. The file defines a

load-balancing

worker named

myloadbalancer

, and the two

balanced

workers,

worker1

and

worker2

. Each cluster member will be configured to play the

role of one of the balanced workers. Additionally, a

status worker jkstatus is defined for controlling

and monitoring the load-balancing.

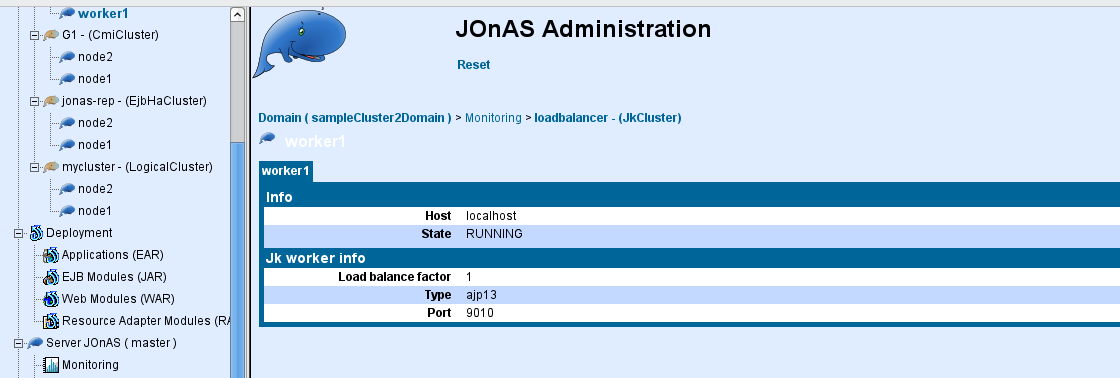

Example 3.1. Configuring mod_jk workers

#-----------------------

# List the workers name

#-----------------------

worker.list=myloadbalancer,jkstatus

#-----------------------

# worker1

#-----------------------

worker.worker1.port=9010

worker.worker1.host=localhost

worker.worker1.type=ajp13 # Load balance factor

worker.worker1.lbfactor=1 # Define preferred failover node for worker1

#worker.worker1.redirect=worker2 # Disable worker1 for all requests except failover

#worker.worker1.disabled=True

#-----------------------

# worker2

#-----------------------

worker.worker2.port=9011

worker.worker2.host=localhost

worker.worker2.type=ajp13 # Load balance factor

worker.worker2.lbfactor=1 # Define preferred failover node for worker2

#worker.worker2.redirect=worker2 # Disable worker2 for all requests except failover

#worker.worker2.disabled=True

#-----------------------

# Load Balancer worker

#-----------------------

worker.myloadbalancer.type=lb

worker.myloadbalancer.balance_workers=worker1,worker2

worker.myloadbalancer.sticky_session=false

#-----------------------

# jkstatus worker

#-----------------------

worker.jkstatus.type=status

For a complete documentation about

workers.properties

see the Apache Tomcat Connector

guide

.

The Apache configuration must be enhanced for loading the mod_jk plugin with its setting. The following lines

have to be added to the httpd.conf file directly or included from another file:

Example 3.2. Configuring mod_jk mount points

# Load mod_jk module

# Update this path to match your modules location

LoadModule jk_module modules/mod_jk.so

# Location of the workers.properties file

# Update this path to match your conf directory location JkWorkersFile

# (put workers.properties next to httpd.conf)

/etc/httpd/conf/workers.properties

# Location of the log file JkLogFile /var/log/mod_jk.log

# Log level : debug, info, error or emerg

JkLogLevel info

# Select the timestamp log format

JkLogStampFormat "[%a %b %d %H:%M:%S %Y] "

# Shared Memory Filename ( Only for Unix platform ) required by loadbalancer

JkShmFile /var/log/jk.shm

# Assign specific URL to the workers

JkMount /sampleCluster2 myloadbalancer

JkMount /sampleCluster2/* myloadbalancer

JkMount /sampleCluster3 myloadbalancer

JkMount /sampleCluster3/* myloadbalancer

# A mount point to the status worker

JkMount /jkmanager jkstatus

JkMount /jkmanager/* jkstatus

# Copy mount points into all virtual hosts

JkMountCopy All

# Enable the Jk manager access only from localhost

<Location /jkmanager/>

JkMount jkstatus

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>

The location of the workers.properties file has to be adapted to your environment.

The JkMount directives specify the routes that are managed by mod_jk. The examples of

context urls pattern have to be replaced with your application ones.

For a complete documentation see Apache

HowTo

.

Each cluster member needs an AJP/1.3 connector listening on the

port defined in the workers.properties file. Moreover,

the worker name (here in the example, worker1/worker2) must be used as

value for the Engine's

jvmRoute

attribute.

Here is a chunk of tomcat6-server.xml configurations file for

the member worker1:

Example 3.3. Configuring an AJP connector in Tomcat

<Server>

<!-- Define the Tomcat Stand-Alone Service -->

<Service name="Tomcat-JOnAS">

<!-- Define a non-SSL Coyote HTTP/1.1 Connector on port 9000 -->

<Connector port="9000" protocol="HTTP/1.1" connectionTimeout="20000"

redirectPort="9043" />

<!-- AJP 1.3 Connector on port 9010 for worker.worker1.port in workers.properties file -->

<Connector port="9010" redirectPort="9043" protocol="AJP/1.3"/>

<!-- An Engine represents the entry point You should set jvmRoute to support load-balancing via AJP ie : -->

<Engine name="jonas" defaultHost="localhost" jvmRoute="worker1">

</Engine>

</Service>

<Server>

Contrary to mod_jk, mod_proxy_balancer supports HTTP and AJP protocols. Thus the cluster member can

specify either an AJP/1.3 connector or a HTTP connector. In both case, the connector port number must be the same than the port number

defined in the Apache's configuration file.

Moreover, the BalancerMember route parameter must be used as value for the Engine's

jvmRoute

attribute.

The load-balancing is configured as a WEB farm, see Section 3.1.1, “Configuring a WEB farm with mod_jk” and Section 3.1.2, “Configuring a WEB farm with mod_proxy_balancer”.

Additionally to HTTP requests load balancing provided by mod_jk, transparent failover for Web applications can be reached by using HTTP session replication provided by the Tomcat clustering solution.

Web cluster members are JOnAS instances having the web container service activated, using the Tomcat implementation, and having a specific configuration which allows them to be members of a Tomcat cluster.

The concerned configuration file is the

tomcat6-server.xml

file. Every member of the cluster must have a Cluster

element defined in the default virtual host definition.

The cluster name is defined by the clusterName

attribute, which should be the same for all the cluster

members. Another common element for the cluster members

is the Membership definition.

The example below defines a configuration of the Cluster element for an all-to-all session replication with the DeltaManager.

This works great for small cluster. For larger cluster, BackupManager enables to replicate the session data to one backup node,

and only to nodes that have the application deployed. See the

documentation

for more informations.

Example 3.4. Configuring the HTTP session replication in Tomcat

<!-- Define a Cluster element -->

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8" clustername="mycluster" >

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="auto"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

the

clusterName

attribute is mandatory for administration purpose (and not set by default

in

|

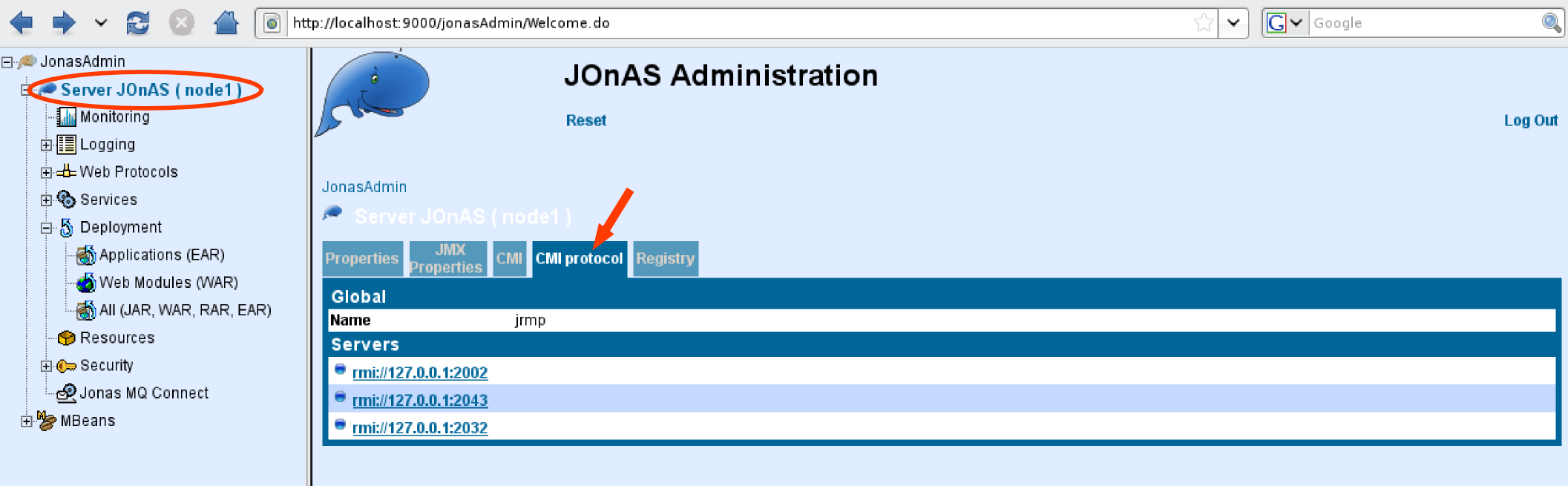

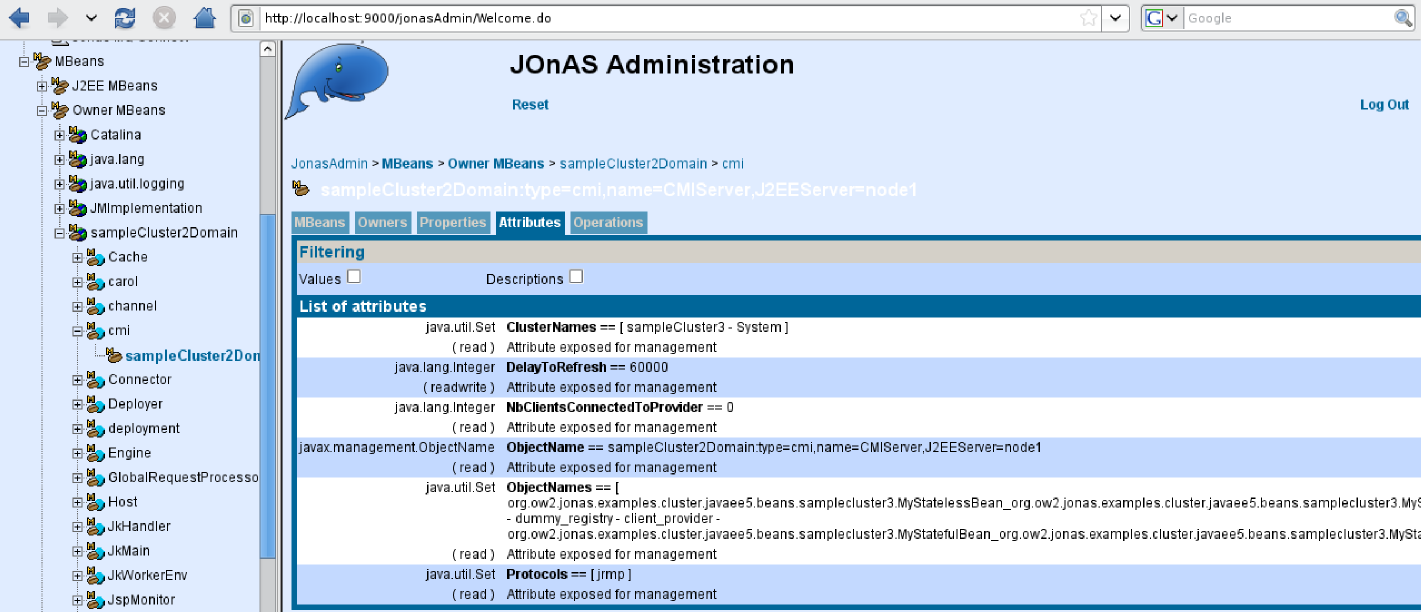

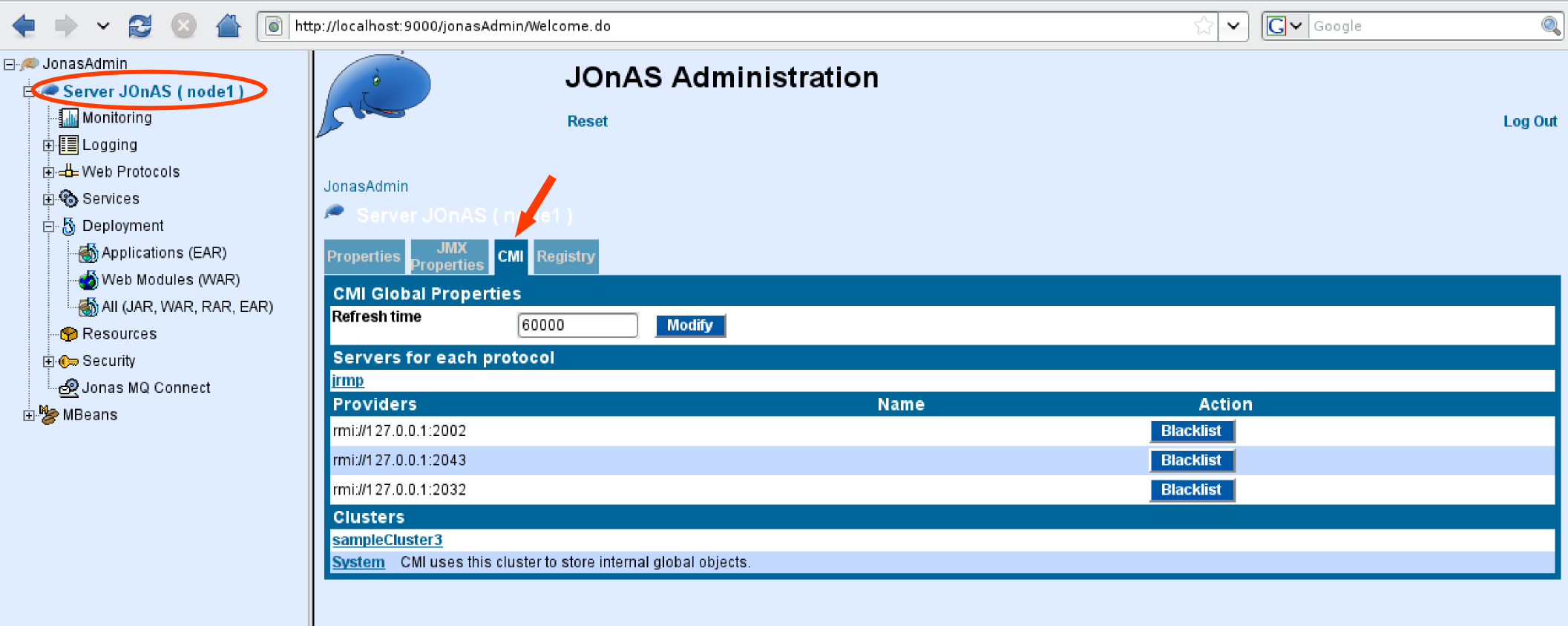

CMI and HA service do provide the following clustering features:

JNDI high availability through the registry replication and the multi-target lookup

-

EJB load-balancing and fail-over through the CMI cluster proxy

for the EJB2.1 home interface (SSB, SFSB, EB)

for the EJB2.1 remote interface (SSB)

for the EJB3 (SSB, SFSB)

-

EJB high availability with the HA service

for the EJB2.1 SFSB

EJB3 support being under development

|

CMI is an OW2 project providing a framework to define and configure clusters of RMI objects. CMI is embedded both in JOnAS and EasyBeans projects.

The main features are :

Support of EJB2 and EJB3

Definition of the cluster logic with simple POJO (policy and strategy)

Delivery of a set of predefined policies (round robin, first available, random, ...) and strategies (weighted, local preference, ...)

Dynamic update of the policy and strategy from the server side

Smooth shutdown of a cluster member

JMX management

Separation of the control flow and the service flow

multi-protocols support: jrmp, irmi, iiop

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

CMI described in this section is CMI v2 available in JOnAS 5 (not in JOnAS 4). |

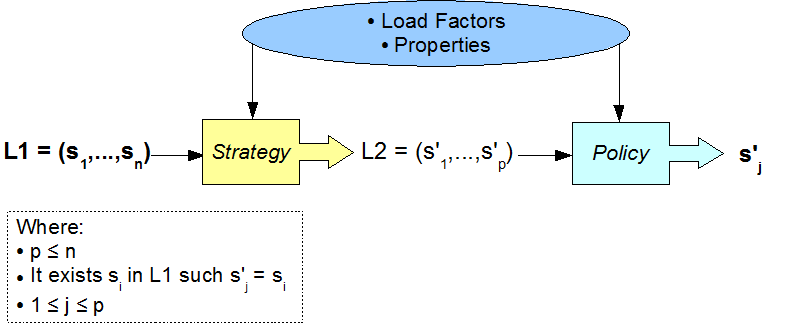

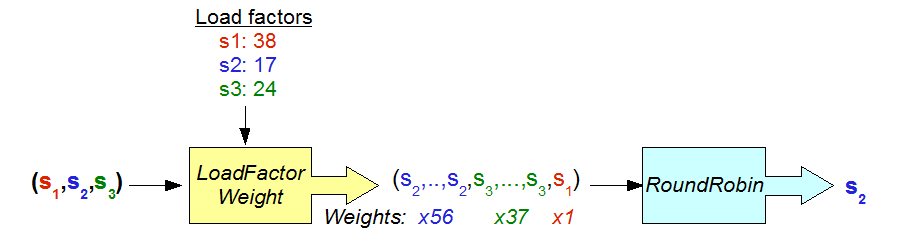

The following figure shows how to define a weighted round-robin in term of policy and strategy.

|

An use of the weighted round-robin

HA service provides High Availability for the EJB. A first implementation based on the Horizontal replication is delivered for EJB2.1. Other solutions are under development: Terracotta based and pair replication.

Stateful session beans (EJB2.1) can be replicated since JOnAS 4.7 in order to provide high availability in the case of failures in clustered environments. A new service called High Availability (HA) has been included in JOnAS to provide replication mechanisms. JOnAS HA also requires the cluster method invocation (CMI) protocol.

From JOnAS 4.8, a new replication algorithm based on a horizontal replication approach is available. The algorithm improves the algorithm implemented for JOnAS 4.7 with the following enhancements:

Replication of SFSBs with references to EBs: The algorithm can replicate SFSBs that reference EB by means of both, local or remote interfaces.

Transaction awareness: The algorithm is transaction aware, meaning that the state is not replicated if the transaction aborts.

Exactly-once semantics: Each transaction is committed exactly once at the DB if the client does not fail. If the client fails, each transaction is committed at most once at the DB

JOnAS implements an update-everywhere replication protocol according to the database replication terminology (See the J. Gray et al.'s paper ''The dangers of replication and a solution'' in proceedings of the ACM SIGMOD 96's conference, Canada). In this protocol, a client can connect to any server. When the client calls the create() method on the SFSB's Home interface, the server the client connects to is selected following a round-robin scheme. All the requests from the client to the SFSB will be processed by this server until the client calls the remove() method on the remote interface. The rest of the servers will act as backups for that client. Before sending the response to the client, the SFSB's state is sent to the backups.

If the server fails, another server among the backups will be selected to serve the client requests, first restoring the current state of the SFSBs from the state information stored in the HA local service. From this point on, this server will receive the new client requests.

The supported replication scenarios are shown in the following figure:

|

The horizontal approach aims to guarantee that the transactions are kept consistent when a fail-over occurs. They are either aborted or restored for ensuring the exactly-once semantics. During a fail-over, the new primary uses a special table in the database for storing the transaction identifier and enabling to find out if the transaction was committed or not.

If the transaction is aborted due to the primary failure, then the new primary will not find the transaction identifier in the special table. The request will be replayed.

If the transaction is committed, then the new primary will find the transaction identifier, which means that the transaction was committed. The request won't be replayed; the replicated result is returned.

Beyond the SFSB replication, the algorithm enables the building of applications (stateful or stateless) with a high level of reliability and integrity.

The setting of an EJB farm is achieved by

- configuring the CMI service

- configuring the registry distribution

- configuring the EJB application

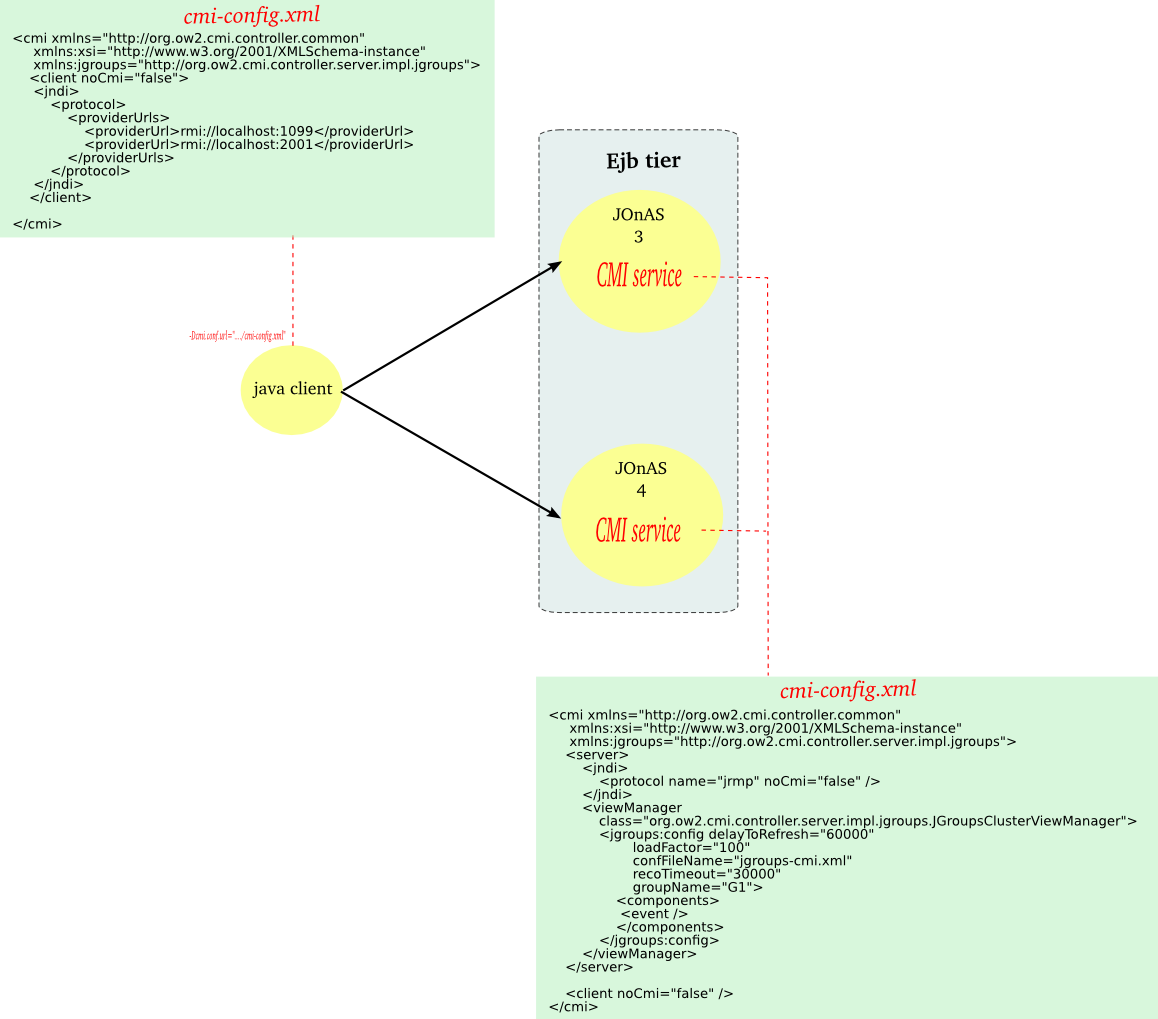

The configuration of the cmi service

is available through the file

$JONAS_BASE/conf/cmi-config.xml.

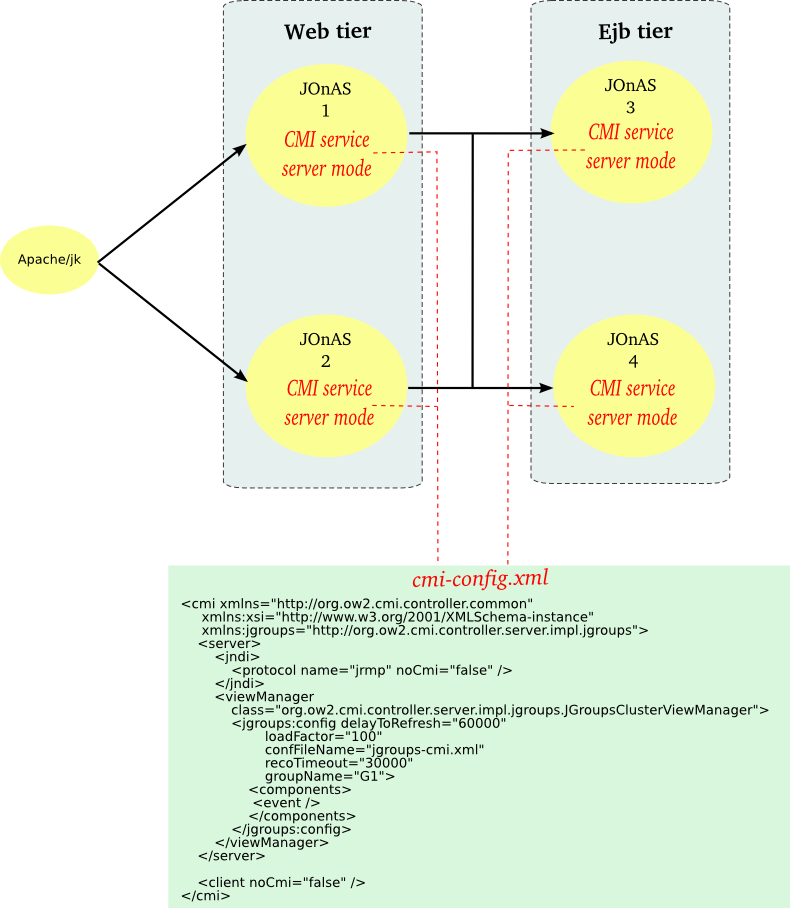

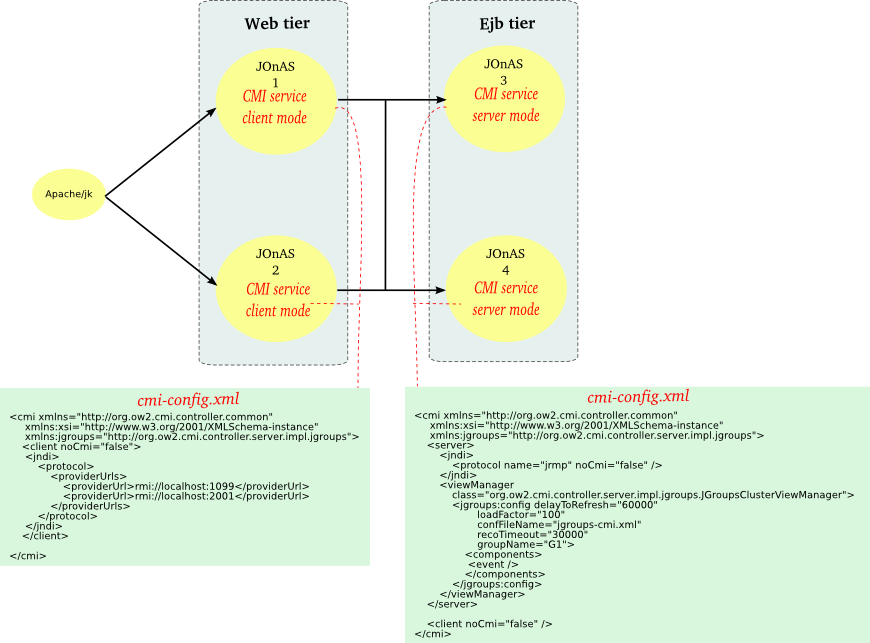

The CMI service can be configured in two modes:

-

server mode with a cluster view manager created locally, i.e. with a local instance of a replicated CMI registry.

-

client mode without a local cluster view manager, in this case a list of providers urls (i.e. a list of cluster view manager urls) is given for accessing to the remote CMI registries.

The server mode is simpler to configure, the client mode requires to define statically a list of providers urls. The server mode starts a Group Communication Protocol instance (e.g. JGroups) and thus increases the resources consumption compare to the client mode.

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

The CMI configuration file may contain two parts: a

|

The server element contains the following elements:

Example 3.5. Configuring the cmi service in the server mode

<cmi xmlns="http://org.ow2.cmi.controller.common"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:jgroups="http://org.ow2.cmi.controller.server.impl.jgroups">

<server>

<jndi>  <protocol name="jrmp" noCmi="false" />

</jndi>

<viewManager

<protocol name="jrmp" noCmi="false" />

</jndi>

<viewManager  class="org.ow2.cmi.controller.server.impl.jgroups.JGroupsClusterViewManager">

class="org.ow2.cmi.controller.server.impl.jgroups.JGroupsClusterViewManager">  <jgroups:config

<jgroups:config  delayToRefresh="60000"

delayToRefresh="60000"  loadFactor="100"

loadFactor="100"  confFileName="jgroups-cmi.xml"

confFileName="jgroups-cmi.xml"  recoTimeout="30000"

recoTimeout="30000"  groupName="G1">

groupName="G1">  <components>

<components>  <event />

</components>

</jgroups:config>

</viewManager>

</server>

</cmi>

<event />

</components>

</jgroups:config>

</viewManager>

</server>

</cmi>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

Refer to the clustering guide for issues related to JGroups. |

The client element contains the following elements:

Example 3.6. Configuring the cmi service in the client mode

<cmi xmlns="http://org.ow2.cmi.controller.common"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<client noCmi="false">  <jndi>

<jndi>  <protocol name="jrmp">

<providerUrls>

<providerUrl>rmi://localhost:1099</providerUrl>

<providerUrl>rmi://localhost:2001</providerUrl>

</providerUrls>

</protocol>

</jndi>

</client>

</cmi>

<protocol name="jrmp">

<providerUrls>

<providerUrl>rmi://localhost:1099</providerUrl>

<providerUrl>rmi://localhost:2001</providerUrl>

</providerUrls>

</protocol>

</jndi>

</client>

</cmi>

|

|

|

|

|

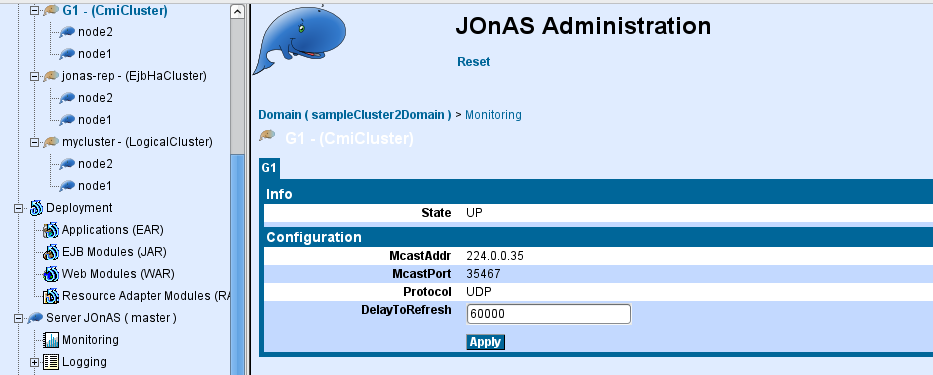

By default, CMI relies on JGroups group-communication protocol for ensuring the global registry distribution. The parameters are gathered in the:

$JONAS_BASE/conf/cmi-config.xml for specifying the JGroups configuration file name and the JGroups group name.

$JONAS_BASE/conf/jgroups-cmi.xml file for the settings of the jgroups protocol stack. By default, the JGroups configuration uses the UDP protocol and the multicast IP for broadcasting the registry updates. A TCP-based stack can be used in a network environment that does not allow the use of multicast IP or when a cluster is distributed over a WAN.

|

For example, the jgroups-cmi.xml file may contain the following stack configuration:

Example 3.7. JGroups's configuration stack

<config>

<UDP mcast_addr="224.0.0.35"

mcast_port="35467"

tos="8"

ucast_recv_buf_size="20000000"

ucast_send_buf_size="640000"

mcast_recv_buf_size="25000000"

mcast_send_buf_size="640000"

loopback="false"

discard_incompatible_packets="true"

max_bundle_size="64000"

max_bundle_timeout="30"

use_incoming_packet_handler="true"

ip_ttl="2"

enable_bundling="true"

enable_diagnostics="true"

thread_naming_pattern="cl"

use_concurrent_stack="true"

thread_pool.enabled="true"

thread_pool.min_threads="1"

thread_pool.max_threads="25"

thread_pool.keep_alive_time="5000"

thread_pool.queue_enabled="false"

thread_pool.queue_max_size="100"

thread_pool.rejection_policy="Run"

oob_thread_pool.enabled="true"

oob_thread_pool.min_threads="1"

oob_thread_pool.max_threads="8"

oob_thread_pool.keep_alive_time="5000"

oob_thread_pool.queue_enabled="false"

oob_thread_pool.queue_max_size="100"

oob_thread_pool.rejection_policy="Run"/>

<PING timeout="2000"

num_initial_members="3"/>

<MERGE2 max_interval="30000"

min_interval="10000"/>

<FD_SOCK/>

<FD timeout="2000" max_tries="3" shun="true"/>

<VERIFY_SUSPECT timeout="1500" />

<BARRIER />

<pbcast.NAKACK max_xmit_size="60000"

use_mcast_xmit="false" gc_lag="0"

retransmit_timeout="300,600,1200,2400,4800"

discard_delivered_msgs="true"/>

<UNICAST timeout="300,600,1200,2400,3600"/>

<pbcast.STABLE stability_delay="1000" desired_avg_gossip="50000"

max_bytes="400000"/>

<VIEW_SYNC avg_send_interval="60000" />

<pbcast.GMS print_local_addr="true" join_timeout="3000"

join_retry_timeout="2000" shun="true" />

<SEQUENCER/>

<FC max_credits="20000000"

min_threshold="0.10"/>

<!--pbcast.STREAMING_STATE_TRANSFER use_reading_thread="true"/-->

<pbcast.STATE_TRANSFER />

<!-- pbcast.FLUSH /-->

</config>

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

| You can find more information about JGroups and about the stack configuration here. |

All the members of a cluster share the same JGroups configuration.

If several cluster partitions are required over a single LAN, several JGroups configurations must be configured with different values for the following parameters:

JGroups group name

JGroups multicast address

JGroups multicast port

When a new node appears in the cluster, its registry content is synchronized automatically.

When a node disappears, JGroups notifies the other's member of the node leaving and the registry entries related to this node are removed.

Informations must be put in the EJB meta-datas for clustering them. The following section gives a description of the clustering parameters of the EJBs and indicates how to configure the load-balancing algorithm. Afterwards, the different settings for EJB2 and EJB3 (specific deployment descriptor and/or annotation) are described.

EJB meta-data for clustering contains the following parameters:

| Parameter | Description |

| name | specifies the cluster name associated with the EJB. This information is set for administration

purpose. Default name is defaultCluster. |

| pool | describes the stubs pool configuration at the client side (one pool per EJB).

|

| policy | specifies the load-balancing algorithm policy (POJO) used for the EJB. Built-in policies are provided. The user can provide its own implementation. |

| strategy | specifies the load-balancing algorithm strategy (POJO) used for the EJB. Built-in strategies are provided. The user can provide its own implementation. |

| properties | Set of properties that may be passed to the policy and/or strategy. The parameter is optional and is reserved for an advanced use. The parameter is not used by the built-in policies and strategies and may be read by the user policies and/or strategies. |

| replicated | boolean indicating whether the ejb is replicated or not. Not applicable in farming. |

The following policies implementations are provided with JOnAS:

| Policy | Description |

| RoundRobin.class | round-robin algorithm, all the node are served sequentially. |

| Random.class | random algorithm, a node is chosen randomly. |

| FirstAvailable.class | first-available algorithm, at first a server is selected randomly and then is bound. |

| HASingleton.class | HA-singleton algorithm, all the clients are bound to the same server. |

The policies can be pointed out with some strategies:

| Strategy | Description | Applicable to policy |

| NoStrategy.class | no strategy, set by default. | All |

| LocalPreference.class | local preference, if present, a collocated server is selected. | RoundRobin, Random, FirstAvailable |

| LoadFactorWeight.class | add a weighted load factor to the policy, for example for specifying the weighted round-robin algorithm. | RoundRobin |

| LoadFactorSort.class | add a sorted load factor to the policy, for example for specifying the sorted round-robin algorithm. | RoundRobin |

CMI permits to provide its own policy and strategy. The POJO must implement CMI interfaces and the classes must be deployed across the cluster.

The policy must implement the org.ow2.cmi.lb.policy.IPolicy interface:

package org.ow2.cmi.lb.policy;

import java.lang.reflect.Method;

import java.util.Collection;

import org.ow2.cmi.lb.LoadBalanceable;

import org.ow2.cmi.lb.NoLoadBalanceableException;

import org.ow2.cmi.lb.decision.DecisionManager;

import org.ow2.cmi.lb.strategy.IStrategy;

/**

* Interface of the policies for load-balancing.

* @param <T> The type of object that was load-balanced

* @author The new CMI team

*/

public interface IPolicy<T extends LoadBalanceable> {

/**

* Chooses a load-balanceable among the list of load-balanceables.

* @param loadBalanceables a list of load-balanceables

* @throws NoLoadBalanceableException if no server is available

* @return the chosen load-balanceable

*/

T choose(Collection<T> loadBalanceables) throws NoLoadBalanceableException;

/**

* Return a strategy to modify the behavior of this policy.

* @return a strategy to modify the behavior of this policy

*/

IStrategy<T> getStrategy();

/**

* Sets a strategy to modify the behavior of this policy.

* @param strategy a strategy of load-balancing

*/

void setStrategy(IStrategy<T> strategy);

/********************** Begin of callback definitions **********************/

/**

* Returns a decision when an exception is thrown during an access to a registry

* for a given load-balanceable.

* @param loadBalanceable the load-balanceable that have caused the exception

* @param thr the exception that is thrown

* @return the decision when an exception is thrown during an access to a registry

* for a given load-balanceable

*/

DecisionManager<Void> onLookupException(T loadBalanceable, Throwable thr);

/**

* Returns a decision when an exception is thrown during an invocation for a given

* load-balanceable.

* @param method the method that was invoked

* @param parameters the parameters of the method

* @param loadBalanceable the load-balanceable that have caused the exception

* @param thr the exception that is thrown

* @return the decision when an exception is thrown during an invocation for a given

* load-balanceable

*/

DecisionManager<Void> onInvokeException(Method method, Object[] parameters, T loadBalanceable, Throwable thr);

/**

* Return a decision when a server is chosen and its delegate retrieved.

* @param <ReturnType> the type of delegate

* @param method the method that was invoked

* @param parameters the parameters of the method

* @param chosenValue the delegate of chosen server

* @return the decision when the server is chosen and its delegate retrieved

*/

<ReturnType> DecisionManager<ReturnType> onChoose(Method method, Object[] parameters, ReturnType chosenValue);

/**

* Returns a decision when the invocation of a remote method ends.

* @param <ReturnType> the type of the returned value

* @param method the method that was invoked

* @param parameters the parameters of the method

* @param loadBalanceable the load-balanceable used for the invocation

* @param retVal the returned value

* @return the decision when the invocation of a remote method ends

*/

<ReturnType> DecisionManager<ReturnType> onReturn(Method method, Object[] parameters, T loadBalanceable, ReturnType retVal);

}

CMI provides the org.ow2.cmi.lb.policy.AbstractPolicy abstract class for simplifying

the policies implementation. The user class can extend it and provides, at least, an implementation of the choose

method. Example for the round-robin implementation:

Example 3.8. Round-robin policy in CMI

package org.ow2.cmi.lb.policy;

import java.util.ArrayList;

import java.util.Collection;

import java.util.List;

import java.util.Random;

import net.jcip.annotations.ThreadSafe;

import org.ow2.cmi.lb.LoadBalanceable;

import org.ow2.cmi.lb.NoLoadBalanceableException;

import org.ow2.cmi.lb.strategy.IStrategy;

import org.ow2.cmi.lb.strategy.NoStrategy;

import org.ow2.util.log.Log;

import org.ow2.util.log.LogFactory;

/**

* The default load-balancing policy (Round Robin) that always chooses the next available load-balanceable.

* @param <T> The type of objects that are load-balanced

* @author The new CMI team

*/

@ThreadSafe

public final class RoundRobin<T extends LoadBalanceable> extends AbstractPolicy<T> {

/**

* Logger.

*/

private static final Log LOGGER = LogFactory.getLog(RoundRobin.class);

/**

* Initial value of the pointer.

*/

private static final int INITIAL_VALUE = -1;

/**

* The pointer to store the last ref.

*/

private int pointer;

/**

* Random numbers.

*/

private final Random rand = new Random();

/**

* Build the Round Robin policy.

* Give to the pointer an initial value.

*/

public RoundRobin() {

pointer = INITIAL_VALUE;

}

/**

* Chooses the next load-balanceable among the list of load-balanceables.

* @param loadBalanceables the list of load-balanceables

* @throws NoLoadBalanceableException if no server available

* @return the chosen load-balanceable

*/

@Override

public synchronized T choose(final Collection<T> loadBalanceables) throws NoLoadBalanceableException{

if (loadBalanceables == null || loadBalanceables.isEmpty()) {

LOGGER.error("The given list is null or empty: " + loadBalanceables);

throw new NoLoadBalanceableException("The given list is null or empty: " + loadBalanceables);

}

List<T> cmiRefsWithStrategy;

IStrategy<T> strategy = getStrategy();

if(strategy != null) {

cmiRefsWithStrategy = strategy.choose(loadBalanceables);

// If no server corresponds at this strategy, we don't use it

if(cmiRefsWithStrategy.isEmpty()) {

cmiRefsWithStrategy = new ArrayList<T>(loadBalanceables);

}

} else {

cmiRefsWithStrategy = new ArrayList<T>(loadBalanceables);

}

int size = cmiRefsWithStrategy.size();

if(pointer == INITIAL_VALUE){

// The initial pointer depends on the strategy

if(strategy != null && !(strategy instanceof NoStrategy)) {

// Use the first element chosen by the strategy

pointer = 0;

} else {

// No strategy, choose randomly the first element

pointer = rand.nextInt(size);

}

} else {

// Perhaps some servers are disappeared, in this case the pointer can out of bounds

if(pointer >= size) {

pointer = INITIAL_VALUE;

}

// Choose the next target

pointer = (pointer + 1) % size;

}

return cmiRefsWithStrategy.get(pointer);

}

@Override

public String toString() {

return "RoundRobin[pointer: "

+ pointer + " - strategy: " + getStrategy() + "]";

}

}

The policy must implement the org.ow2.cmi.lb.strategy.IStrategy interface:

package org.ow2.cmi.lb.strategy;

import java.util.Collection;

import java.util.List;

import org.ow2.cmi.lb.LoadBalanceable;

/**

* Interface of the load-balancing strategies.

* A strategy allows to modify a list of load-balanceables before applying a policy to elect only one load-balanceable.

* @param <T> The type of object that was load-balanced

* @author The new CMI team

*/

public interface IStrategy<T extends LoadBalanceable> {

/**

* Returns a new list of load-balanceables by modifying the given list.

* @param loadBalanceables a list of load-balanceables

* @return a new list of load-balanceables by modifying the given list

*/

List<T> choose(Collection<T> loadBalanceables);

}

Example 3.9. Local preference strategy in CMI

Example for the local preference implementation:

package org.ow2.cmi.lb.strategy;

import java.net.InetAddress;

import java.net.NetworkInterface;

import java.net.SocketException;

import java.util.ArrayList;

import java.util.Collection;

import java.util.List;

import net.jcip.annotations.Immutable;

import org.ow2.cmi.controller.client.ClientClusterViewManager;

import org.ow2.cmi.controller.common.ClusterViewManager;

import org.ow2.cmi.controller.server.ServerClusterViewManager;

import org.ow2.cmi.lb.LoadBalanceable;

import org.ow2.cmi.reference.ServerRef;

import org.ow2.util.log.Log;

import org.ow2.util.log.LogFactory;

/**

* Defines a strategy that enable the local preference.

* @param <T> The type of objects that are load-balanced

* @author The new CMI team

*/

@Immutable

public final class LocalPreference<T extends LoadBalanceable> implements IStrategy<T> {

/**

* Logger.

*/

private static final Log LOGGER = LogFactory.getLog(LocalPreference.class);

/**

* The manager of the cluster view.

*/

private final ClusterViewManager clusterViewManager;

/**

* Constructs a strategy for load-factor.

* @param clusterViewManager the manager of the cluster view

*/

public LocalPreference(final ClusterViewManager clusterViewManager) {

this.clusterViewManager = clusterViewManager;

}

/**

* Returns a list of CMIReference that references the local servers.

* @param cmiRefs a list of CMIReference

* @return a list of CMIReference that references the local servers

*/

public List<T> choose(final Collection<T> cmiRefs) {

List<T> localServers = new ArrayList<T>();

for(T cmiRef : cmiRefs) {

// Gets the reference of server that have deployed the object

ServerRef serverRef = cmiRef.getServerRef();

// Gets its address

InetAddress inetAddress = serverRef.getInetAddress();

try {

// Checks if the addresses match

if(isLocal(inetAddress)) {

// Local address: adds reference in the first position

localServers.add(cmiRef);

}

} catch (SocketException e) {

LOGGER.error("Cannot know if is local or not", e);

throw new RuntimeException("Cannot know if is local or not", e);

}

}

return localServers;

}

/**

* Tests if an address is local.

* @param inetAddress an address

* @return true if the given address is local

* @throws SocketException if an I/O error occurs

*/

private boolean isLocal(final InetAddress inetAddress) throws SocketException {

if(clusterViewManager instanceof ClientClusterViewManager) {

if(NetworkInterface.getByInetAddress(inetAddress)!=null) {

return true;

}

} else if(clusterViewManager instanceof ServerClusterViewManager) {

if(inetAddress.equals(((ServerClusterViewManager) clusterViewManager).getInetAddress())) {

return true;

}

}

return false;

}

@Override

public String toString() {

return "LocalPreference";

}

}

User policy and/or strategy must be deployed across all nodes of the cluster. For example, you can :

- package the POJO in a jar file and put it in the

$JONAS_BASE/lib/extdirectory on each cluster member. - package the POJO in a jar file and put it in a repository and deploy it through a deployment plan.

Clustering meta-datas must be added in the deployment descriptor.

The JOnAS's deployment descriptor of an EJB 2.1 (session stateless, session stateful or entity) may contain the cluster-config element

with the following entries. Refer to Section 3.2.2.1.3.1, “Overview” for a precise information about the parameters.

| Element | Description |

| cluster-config/name | cluster name |

| cluster-config/pool | pool configuration with the following sub-elements

|

| cluster-config/policy | load-balancing policy |

| cluster-config/strategy | load-balancing strategy |

| cluster-config/properties | parameters for a customized user load-balancing algorithm.

Example:

<cluster-config>

...

<properties>

<simple-property name="prop1" value="val1" />

<simple-property name="prop2" value="38" />

<array-property name="prop3">

<value>true</value>

</array-property>

<array-property name="prop4">

<value>java.util.LinkedList</value>

<value>java.util.ArrayList</value>

</array-property>

<array-property name="prop5">

<value>http://carol.ow2.org</value>

</array-property>

</properties>

</cluster-config>

|

Example:

Example 3.10. EJB2.1 deployment descriptor for clustering

<jonas-ejb-jar xmlns="http://www.objectweb.org/jonas/ns"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.objectweb.org/jonas/ns

http://www.objectweb.org/jonas/ns/jonas-ejb-jar_5_1.xsd">

<jonas-session>

<ejb-name>MyEjb1SLR</ejb-name>

<jndi-name>MyEjb1Home</jndi-name>

<min-pool-size>3</min-pool-size>

<cluster-config>

<name>jonas-cluster</name>

<policy>org.ow2.cmi.lb.policy.RoundRobin</policy>

<strategy>org.ow2.cmi.lb.strategy.LocalPreference</strategy>

<pool>

<max-size>10</max-size>

<max-waiters>15</max-waiters>

<timeout>2000</timeout>

</pool>

</cluster-config>

</jonas-session>

</jonas-ejb-jar>

Clustering meta-datas can be added either through Java annotations or in the specific deployment descriptor.

CMI provides the following annotations (Refer to the Section 3.2.2.1.3.1, “Overview”section for more information).

| Annotation | Description |

| @Cluster | Set the cluster name and the pool stubs configuration.

Example:

@Cluster(name="test_cluster", pool=@Pool(max=2)) |

| @Policy | Set the load-balancing algorithm policy.

Example:

@Policy(RoundRobin.class) |

| @Strategy | Set the load-balancing algorithm strategy.

Example:

@Strategy(LocalPreference.class) |

| @Properties | Set properties for user policies and/or strategies.

Example:

@Properties(

simpleProperties={

@SimpleProperty(

name="prop1",

value="val1"),

@SimpleProperty(

name="prop2",

value="38")},

arrayProperties={

@ArrayProperty(

name="prop3"

values="true"),

@ArrayProperty(

name="prop4",

values={"java.util.LinkedList",

"java.util.ArrayList"}),

@ArrayProperty(

name="prop5",

values={"http://www.ow2.org"})})

|

The example below illustrates the use of the annotations for configuring a cluster named test_cluster.

The round-robin algorithm is set by default.

The complete code can be downloaded from the EasyBeans project

Example 3.11. EJB3 SSB with clustering annotations

package org.ow2.easybeans.examples.cluster;

import javax.ejb.Remote;

import javax.ejb.Stateless;

import org.ow2.cmi.annotation.Cluster;

import org.ow2.cmi.annotation.Policy;

import org.ow2.cmi.lb.policy.RoundRobin;

import org.ow2.easybeans.api.bean.EasyBeansBean;

@Stateless

@Remote(ClusterRemote.class)

@Cluster(name="test_cluster")

@Policy(RoundRobin.class)

public class ClusterBeanAN implements ClusterRemote {

private String ezbServerDescription = null;

public String getEZBServerDescription(final String msg) {

if(this.ezbServerDescription == null) {

this.ezbServerDescription =

((EasyBeansBean) this).getEasyBeansFactory().getContainer().getConfiguration().getEZBServer().getDescription();

}

System.out.println(msg);

return this.ezbServerDescription + "\n";

}

}

The default clustering configuration can be overridden by a specific deployment descriptor.

The file is named easybeans.xml and may contain the cluster:cluster element

with the following entries. Refer to Section 3.2.2.1.3.1, “Overview” for a precise information about the parameters.

| Element | Description |

| cluster:cluster/name | cluster name |

| cluster:cluster/pool | pool configuration with the following sub-elements

|

| cluster:cluster/policy | load-balancing policy |

| cluster:cluster/strategy | load-balancing strategy |

| cluster:cluster/properties | parameters for a customized user load-balancing algorithm

Example:

<cluster:cluster>

...

<properties>

<simple-property name="prop1" value="val1" />

<simple-property name="prop2" value="38" />

<array-property name="prop3">

<value>true</value>

</array-property>

<array-property name="prop4">

<value>java.util.LinkedList</value>

<value>java.util.ArrayList</value>

</array-property>

<array-property name="prop5">

<value>http://carol.ow2.org</value>

</array-property>

</properties>

</cluster:cluster>

|

Example:

Example 3.12. EJB3 deployment descriptor for clustering

<easybeans xmlns="http://org.ow2.easybeans.deployment.ejb"

xmlns:cluster="http://org.ow2.cmi.info.mapping">

<ejb>

<session>

<ejb-name>clusterXMLBean</ejb-name>

<cluster:cluster name="easybeans-cmi">

<cluster:policy>org.ow2.cmi.lb.policy.FirstAvailable</cluster:policy>

<cluster:strategy>org.ow2.cmi.lb.strategy.LocalPreference</cluster:strategy>

<pool>

<max-size>10</max-size>

<max-waiters>15</max-waiters>

<timeout>2000</timeout>

</pool>

</cluster:cluster>

</session>

</ejb>

</easybeans>

EJB clustering can be transparent for the client so that a client which is connected to a standalone server can be switched to a clustering mode without any configuration changes.

However either for disabling CMI and for ensuring the JNDI availability, a CMI configuration can be required.

Depending on the type of the client, the configuration is retrieved from:

| Type of client | Location of the configuration file |

| Java client | Pointed by the cmi.conf.url java property.

Example:

java -jar myclient.jar -cp<...> -Dcmi.conf.url=/tmp/cmi-config.xml

|

| JOnAS client (jclient) | Pointed by the cmi.conf.url java property. |

| Web tier client | In $JONAS_BASE/conf/cmi-config.xml file. |

If the server side is configured with CMI, by default, the CMI client will switch to the cluster mode and will perform a load-balancing. For administration purpose or application requirements as well, one may want to enforce the client not to use the clustering mode. There are two different settings for doing that:

| Setting | Description and example |

| Java property | The cmi.disabled java property must be set to true.

jclient -jar myclient.jar -Dcmi.disabled=true

|

| CMI configuration file | The client part of the configuration file must contain the noCmi attribute set to true.

<cmi xmlns="http://org.ow2.cmi.controller.common"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<client noCmi="true" />

</cmi>

|

CAROL is a the JOnAS's protocol abstract layer

and the carol.properties indicates the default protocol and the registry URL.

The client retrieves the cluster view from the server side. By default it gets in

touch with the server identified by the CAROL configuration.

For ensuring high availability of the service, a list of cluster view manager URLs can be provided to the client

through the CMI configuration file. Refer to Section 3.2.2.1.1, “cmi service configuration” for a precise information

about the setting.

Example:

Example 3.13. CMI configuration at the client side

<cmi xmlns="http://org.ow2.cmi.controller.common"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<client noCmi="false">

<jndi>

<protocol name="jrmp">

<providerUrls>

<providerUrl>rmi://localhost:1099</providerUrl>

<providerUrl>rmi://localhost:2001</providerUrl>

</providerUrls>

</protocol>

</jndi>

</client>

</cmi>

A first available policy with local preference is applied for selecting a provider URL. If the primary gets unavailable, a secondary is selected randomly at fail-over.

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

In the case of a web tier which acts as a CMI client (CMI service with client mode), a list of provider URLs must be

specified in the $JONAS_BASE/conf/cmi-config.xml file.

|

|

Use case : fits with the most use and is the default configuration. A CMI view manager is started in each node.

|

Use case : when a local view manager is not acceptable regarding the resources consumptions or the network configuration.

The setting of an EJB distribution is achieved by

- configuring the CMI service

- configuring the registry distribution

The EJB application doesn't need to be modified.

The High Availability (HA) service is required in JOnAS in order to replicate SFSBs. The HA service must be included in the list of available services in JOnAS. This is done in the jonas.properties file located in $JONAS_BASE/conf.

... jonas.services registry,jmx,jtm,db,dbm,security,wm,wc,resource,cmi,ha,ejb2,ejb3,ws,web,ear,depmonitor ...

The HA service must also be configured in the jonas.properties file:

The ha (High Availability) service is required in order to replicate stateful session beans (SFSBs).

The ha service uses JGroups as a group communication protocol (GCP).

Here is the part of jonas.properties related to

ha service:

###################### JOnAS HA service configuration # # Set the name of the implementation class of the HA service. jonas.service.ha.class org.ow2.jonas.ha.internal.HaServiceImpl # Set the JGroups configuration file name jonas.service.ha.jgroups.conf jgroups-ha.xml# Set the JGroups group name jonas.service.ha.jgroups.groupname jonas-rep

# Set the SFSB backup info timeout. The info stored in the backup node is removed when the timer expires. jonas.service.ha.gc.period 600

# Set the datasource for the tx table jonas.service.ha.datasource jdbc_1

# Reconnection timeout for JGroups Channel, if it's closed on request. jonas.service.ha.reconnection.timeout 5000

|

Set the name of the JGroups configuration file. |

|

|

Set the name of the JGroups group. |

|

|

Set the period of time (in seconds) the system waits before cleaning useless replication information. |

|

|

Set the JNDI name of the datasource corresponding to the database where is located the transaction table used by the replication mechanism. |

|

|

Set the delay to wait for a reconnection. |

![[Note]](../../resources/images/note.png) |

Note |

|---|---|

|

Refer to the clustering guide for issues related to JGroups. |

The HA service uses JGroups as a group communication layer (GCL). JGroups behavior is specified by means of a stack of properties configured through an XML file (See JGroups documentation for more information: http://www.jgroups.org). The default configuration of the HA service uses the $JONAS_BASE/conf/jgroups-ha.xml file and the jonas-rep group name. The HA service can be told to use a particular stack configuration or a particular group name by modifying the following parameters:

... jonas.service.ha.jgroups.conf jgroups-ha.xml jonas.service.ha.jgroups.groupname jonas-rep ...

The horizontal replication algorithm uses a database table to keep track of current running transactions. This table is accessed from the new elected node during fail-over to detect whether or not the current transaction has committed at the former local node, ensuring exactly-once semantics. The table contains only one column: the transaction identifier (txid).

In JOnAS 4.8 this table must be created manually with the following SQL command:

create TABLE ha_transactions (txid varchar(60));

This table should be located preferably in the database used by the replicated application, but it is not mandatory. If the table is not created in the database used by the replicated application, it is necessary to configure a new datasource for the database that contains this transaction table. This datasource must be configured to use the serializable transaction isolation level.

The database that holds the transaction table is accessed by the replication service with the JNDI name configured in jonas.properties.

... jonas.service.ha.datasource tx_table_ds ...

Due to the fact that the replication algorithm stores information associated with clients' transactions and that the server is not notified when a client dies, the HA service might have been storing unnecessary replication information over time. In order to automatically clean this unnecessary replication information, the HA service includes a garbage collection mechanism. It is possible to configure the number of seconds the system waits to execute this mechanism by changing the following property in the jonas.properties file:

... jonas.service.ha.timeout 600 ...

In order to configure an application for replication, the <cluster-replicated/> element must be added to the bean definition of every bean requiring high availability in the jonas-ejb-jar.xml deployment descriptor file. This element can have two possible values: true or false (default value). In addition, if the programmer wants to change the behavior of the CMI proxy (e.g., the server selection policy), it is possible to specify different policy implementations by means of <cluster-config/> elements.

The following is an example description for a replicated SFSB in jonas-ejb-jar.xml file:

Example 3.14. EJB2.1 deployment descriptor for session replication

...

<jonas-session>

<ejb-name>DummySFSB</ejb-name>

<jndi-name>DummySFSB</jndi-name>

...

<cluster-replicated>true</cluster-replicated>

<cluster-config>

<name>jonas-cluster</name>

<policy>org.ow2.cmi.lb.policy.RoundRobin</policy>

<strategy>org.ow2.cmi.lb.strategy.LocalPreference</strategy>

<pool>

<max-size>10</max-size>

<max-waiters>15</max-waiters>

<timeout>2000</timeout>

</pool>

</cluster-config>

<is-modified-method-name>true</is-modified-method-name>

</jonas-session>

...

The <cluster-replicated/> element can also be set in the SSB or EB for

enabling the transaction checking mechanism ensuring the exactly-once semantic at fail-over

supporting the EB references replication

Note: When set in the SSB, the mechanism inhibits the load-balancing at the remote interface. After the home create() method call, all the requests are sent to the same instance.

The lock policy for the Entity Beans in a replicated application must be configured as database in the jonas-ejb-jar.xml deployment descriptor file.

The following is an example description for a replicated EB, i.e. an entity that is accessed from a replicated SFSB, in the jonas-ejb-jar.xml:

...

<jonas-entity>

<ejb-name>MyEntitySLR</ejb-name>

<jndi-name>MyEntityHome</jndi-name>

<cluster-replicated>true</cluster-replicated>

<shared>true</shared>

<jdbc-mapping>

<jndi-name>example_ds</jndi-name>

</jdbc-mapping>

<lock-policy>database</lock-policy>

</jonas-entity>

...

The datasources used by replicated applications must be configured to use the serializable transaction isolation level.

The following is an example for a datasource configuration file for the Postgres DBMS:

... datasource.name example_ds datasource.url jdbc:postgresql://xxx.xxx.xxx.xxx:xxxx/database datasource.classname org.postgresql.Driver datasource.username jonas datasource.password datasource.mapper rdb.postgres datasource.isolationlevel serializable ...

Finally, when compiling the application that includes the replicated beans, the CMI protocol must be specified in order to generate the classes that include the replication logic.

As for the farming, the cluster mode can be transparent for the client configuration apart for the expected high availability of the CMI internals service. In particular the CMI cluster view manager client part must be configure with a list of provider urls in order to be able to take-over when a server node failure occurs. Refer to Section 3.2.2.2, “At the client side” for a precise information about the setting.

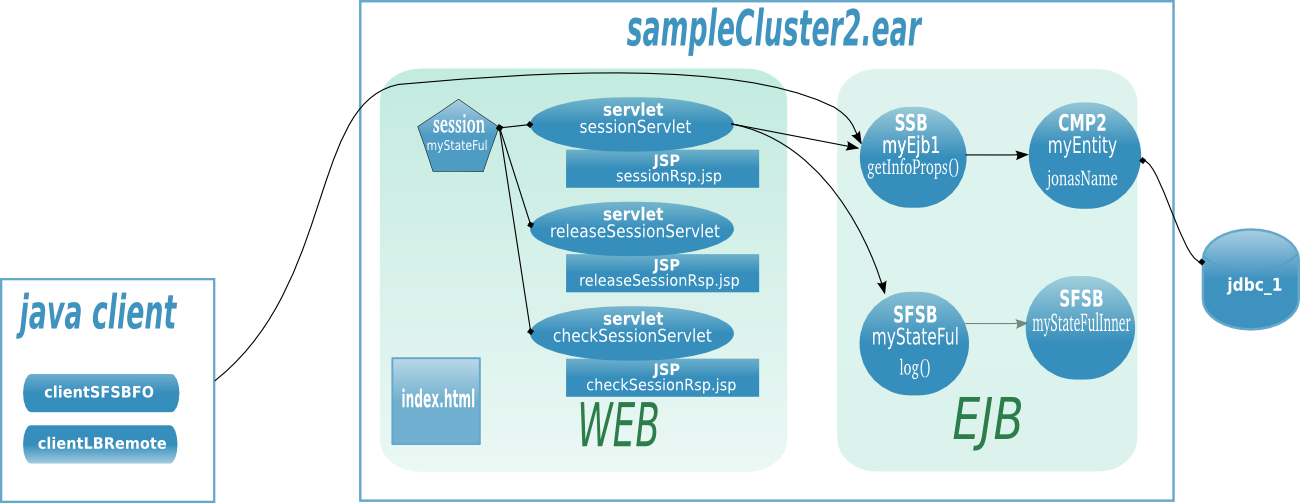

The JMS API provides a separate domain for each messaging approach, point-to-point or publish/subscribe. The point-to-point domain is built around the concept of queues, senders and receivers. The publish/subscribe domain is built around the concept of topic, publisher and subscriber. Additionally it provides a unified domain with common interfaces that enable the use of queue and topic. This domain defines the concept of producers and consumers. The classic sample provided with JOnAS ($JONAS_ROOT/examples/javaee5-earsample) uses a very simple configuration (centralized) made of one server hosting a queue and/or a topic. The server is administratively configured for accepting connection requests from the anonymous user.

JMS clustering aims to offer a solution for both the scalability and the high availability for the JMS accesses. This chapter gives an overview of the JORAM capabilities for clustering a JMS application in the Java EE context. The load-balancing and fail-over mechanisms are described and a user guide describing how to build such a configuration is provided. Further information is available in the JORAM documentation here .

The following information will be presented:

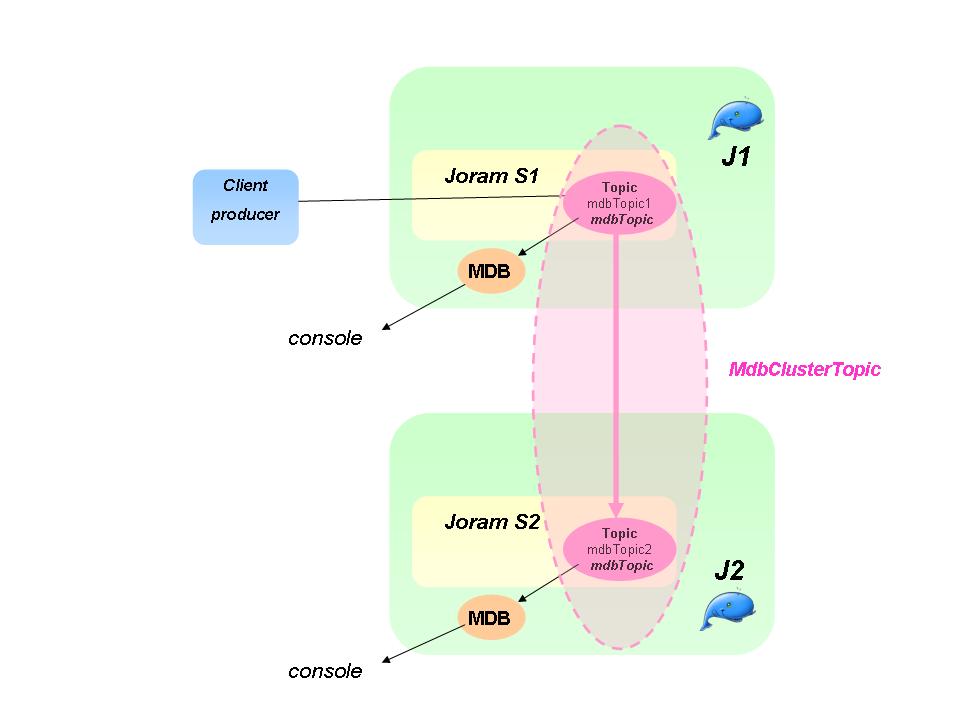

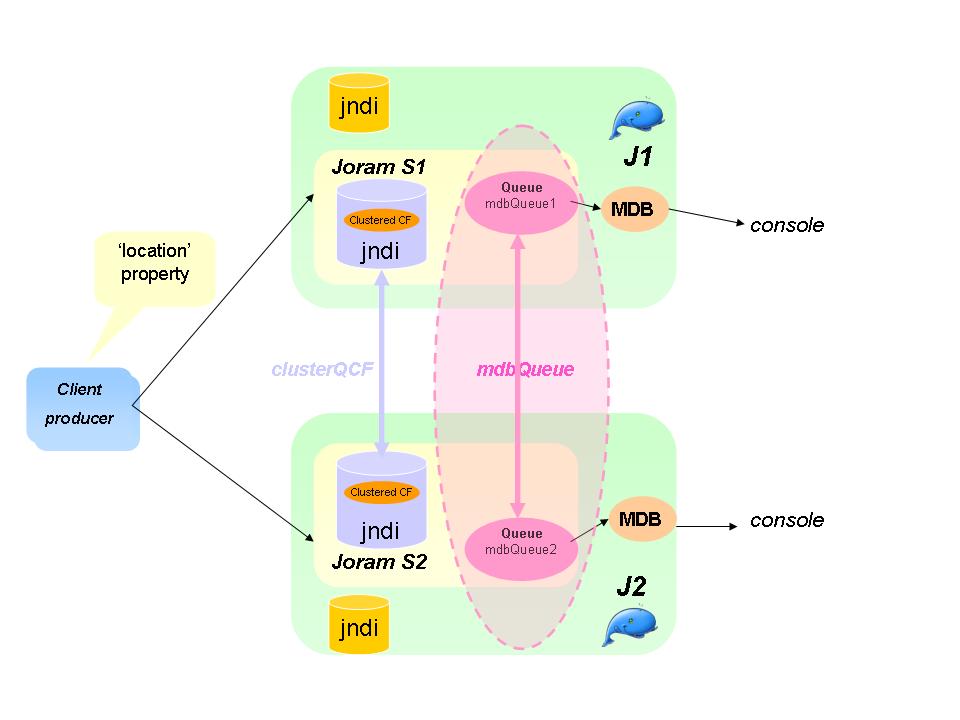

first, section Section 3.3.2, “Example” presents the example that will be used in this section about JMS cluster.

then, Section 3.3.3, “Load balancing” details load balancing throw cluster topic and cluster queue. The distributed capabilities of JORAM will be detailed as well.

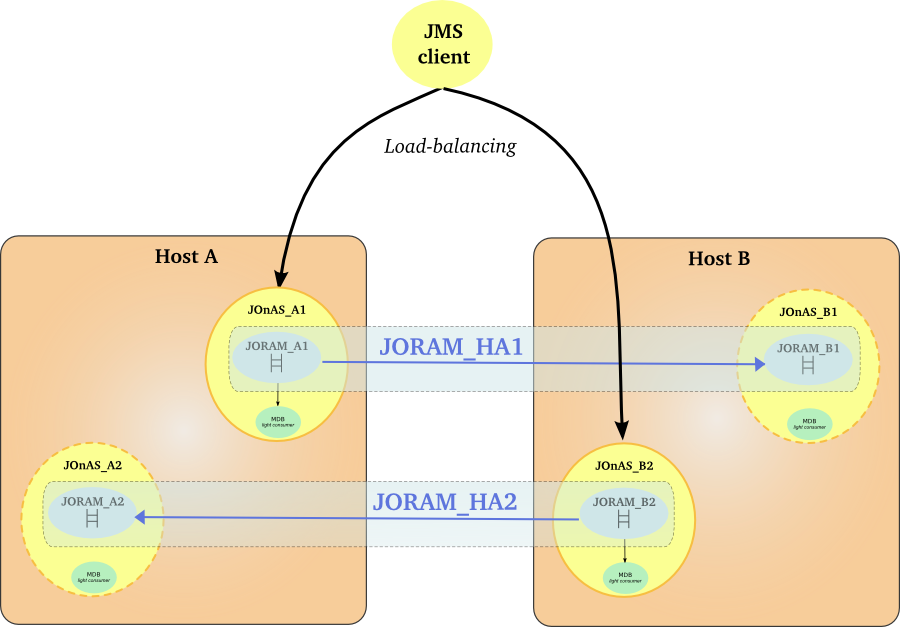

Section 3.3.4, “JORAM HA and JOnAS” describes how to configure the JORAM HA enabling to ensure the high availability of the JORAM server.

Section 3.3.5, “MDB Clustering” introduces how to build an MDB clustering architecture with both JOnAS and JORAM.

The documentation provides an example of JMS cluster with 2 members and a MDB application.